Identifying the cause of low performance

Hi. First, I would like to introduce myself to the forum. I bought GSA SER a couple of days ago and so far I love it.

I'm not new to IM tools; I have experience with others such as Xrumer and Scrapebox. I've done my research, read the FAQ/tutorial threads in the forum, and learnt a lot.

I've started 3 test projects, but the results are not as good as I was expecting.

These are my server specs:

- Type: Dedicated server
- CPU: Xeon, 4 cores with Hyper-Threading (8 virtual)
- Memory: 32 GB
- HDD: 2 TB RAID 1
- Network: 100 Mbps
- Threads: 230+
- Proxies: 40 semi-private

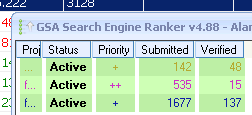

And these are my projects:

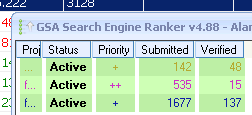

- 1st project: running for 2 hours (1 URL)

- 2nd project: running for 10 hours (13 URLs)

- 3rd project: running for 23 hours (1 URL)

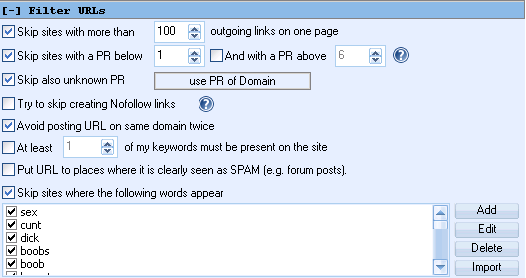

I've applied all the suggestions from the previous threads:

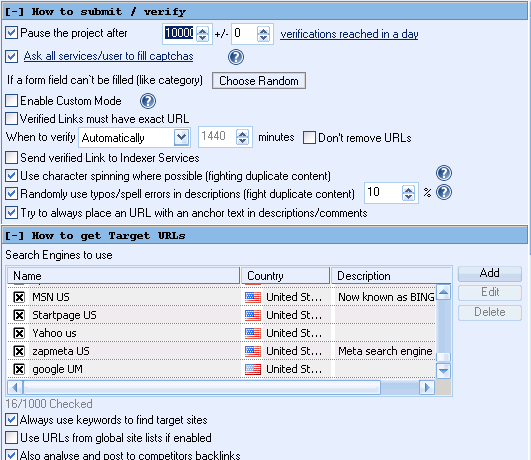

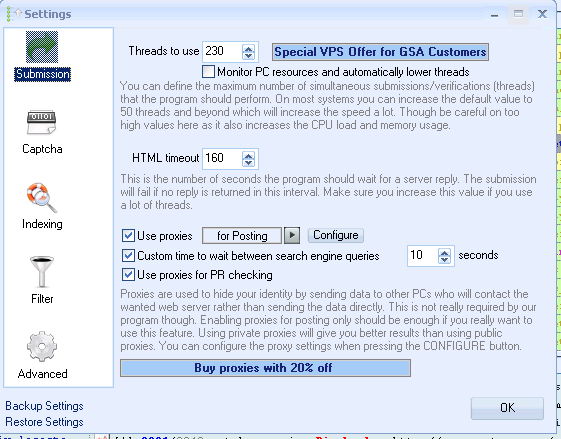

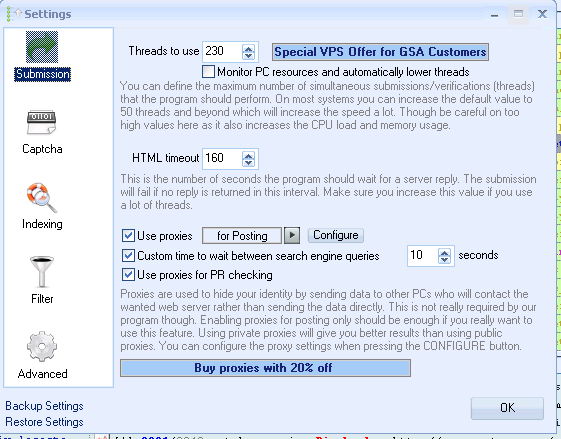

- Set threads to 230 with an HTML timeout of 150.

- Using Captcha Sniper with the latest definition files.

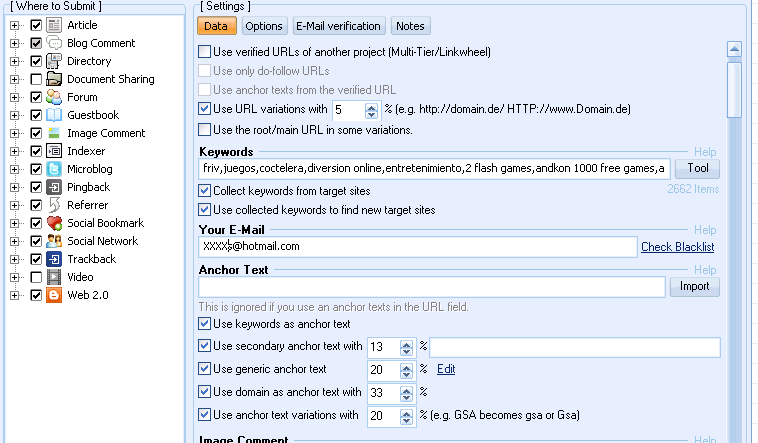

- Using a huge list of keywords (250,000+).

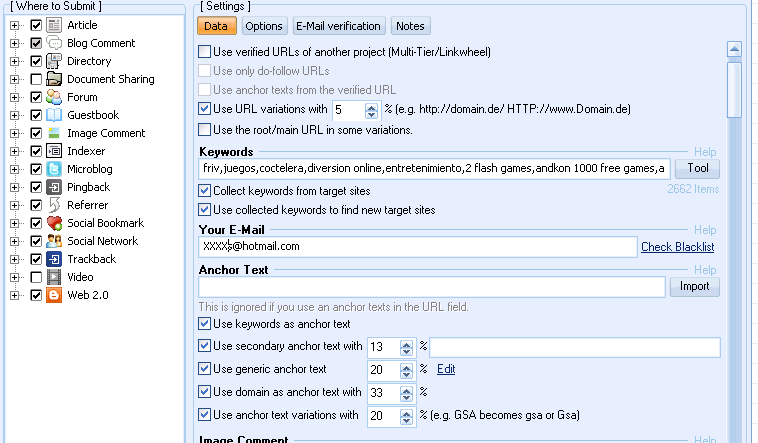

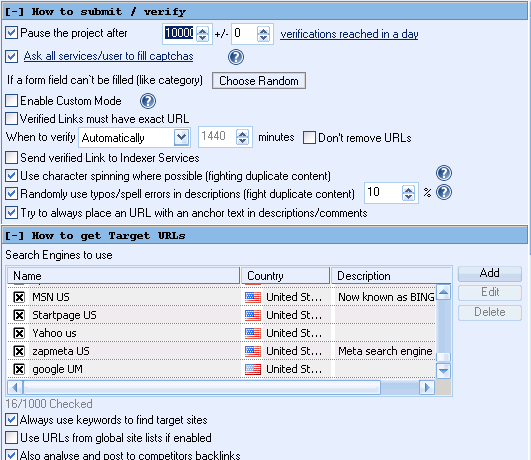

- Using only the needed search engines (15 to 20, depending on the project).

- I'm submitting links to all platforms except doc sharing sites.

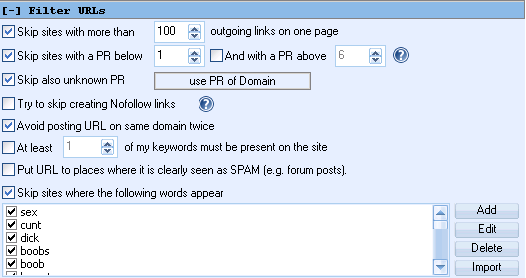

If it helps, this is how I'm setting up my projects:

For the category list, I'm using the same list of 500 categories that I use in AMR.

What could be the reason for my poor performance? I'm after volume, since these are low tiers. I've read that some members, like @leeg, are getting thousands of submissions per day. Am I right to assume that the lists are a key factor here? I'm scraping my own lists with Scrapebox and I'll try again later today.

I'd be thankful if you could help me out with this.

I don't have much to share yet, but I'm willing to help, so if I can do anything for the community, let me know.

Thank you very much.

Comments

Welcome to the forum.

The option "always use keywords to find targets" is probably the cause of the low results. Didn't the program warn you when you enabled it?

...useful for niche-related platforms like 'Blog Comments'. I recommend either unchecking this or moving the niche-related platforms into a separate project, because you won't find many niche-related directories, for example.

Real newb question coming up: if the option above is unchecked, how does GSA SER find target URLs? I'm worried I'll run out of target sites.