Finally all set up again I think? (I have a couple of questions)

Hello,

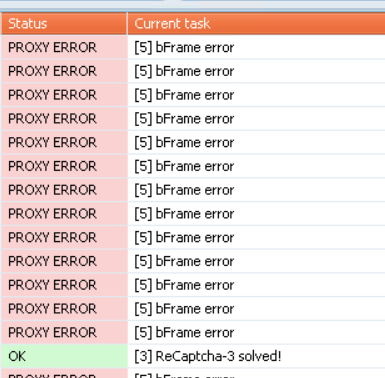

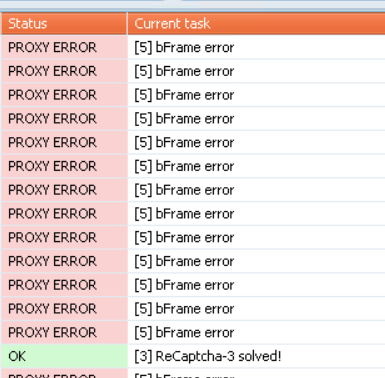

It's been a long time since I last used anything to do with SEO. I first bought GSA tools in 2013 and had massive success with them. Since I stopped around 2017 (I think), I have run all kinds of PPC and still do.

Anyway, I finally stopped procrastinating and got everything I need (I think) to get the ball rolling. What I have bought/set up so far:

- I've started paying for GSASERlists

- I've started paying for catchall emails

- I use Solidseo VPS

- I paid for Xevil

- I added some money to OpenAI and get articles from there into SER

- I just paid for Spinrewriter and I'm now using their API to spin the AI articles.

- I've bought 30 proxies from buyproxies (20 for SER, 10 for Xevil). Good idea or not?

- I got the MOZ chrome extension for PA/DA.

I made a random blog, just to test and play around with until I figure out what I want to focus on ranking.

I think that covers everything I've done so far. Thanks to @backlinkaddict & @organiccastle for replying to my thread when I came back. It seemed like information overload, but now that everything is launched and set up it's not so bad after all!

Anyway, everything is running and Xevil keeps throwing these errors and has quite a lot of fails:

And then here's the success rates so far:

I followed the guide in GSASERlists on how to set up Xevil.

Questions:

Why is Xevil throwing those errors?

What's the best way to track serps these days? I forgot the tool I used to use that had green arrows/red arrows etc when moving up or down - stupid I know but I really liked that for tracking serps.

How can I increase LPM? I use verified lists auto-syncing from dropbox, SER doesn't scrape or look for targets.

I'm currently getting 6 LPM with 1 project.

Well, I'm glad to be back!

Happy to see it's quite active and still lots of valuable info here. Also happy to see @Sven constantly supportive too after all this time! Awesome stuff you do here, it's greatly appreciated.

Comments

I will try that now with Xevil and just signed up to Serpcloud.

I left it blasting and just got online now the success rate is like this:

I'll try your suggestions and report back. Thanks!

I've started paying for GSASERlists

Don't ever pay for a list. They are all shit. Learn how to create your own list ( Not from Google, with Scrapebox)

I've started paying for catchall emails

It costs $12 to create unlimited catchall emails on Zoho. Learn that

I use Solidseo VPS

Ready-made Windows VPSs are overpriced. Learn how to install Windows on a Linux machine.

I paid for Xevil

Ok

I added some money to OpenAI and get articles from there into SER

Ok

I just paid for Spinrewriter and I'm now using their API to spin the AI articles.

Ok

I've bought 30 proxies from buyproxies (20 for SER, 10 for Xevil) good idea or not?

I got the MOZ chrome extension for PA/DA.

PA/DA is a shit metric, let alone MOZ. The truth is, you don't really need PA/DA data for the websites where you want to place backlinks. Supply is limited anyway.

I am using Xevil 5 as the primary captcha solver, capmonster.cloud as a backup and for hCaptchas.

Some sites have not implemented Captchas correctly, thus the errors you are seeing. Below a screenshot of the capmonster.cloud log.

VPS: Take a look at the Hetzner VPS where you can install Windows with minimal effort.

Spintax: If you own a license of Scrapebox and the Article Scraper plugin, you can generate Spintax with it through the OpenAI-API. This works very well also for non-English content.

Link Lists: You can scrape your own using the footprints in SER or - if you have Xrumer - extract footprints. @royalmice has a great instruction on how-to: https://asiavirtualsolutions.com/use-xrumer-to-find-foot-prints-for-gsa-search-engine-ranker/. Buying link lists seems to be a religion although these all overlap. Worst I have experienced is "SER Verified Lists" which does not even provide identified targets but pure rubbish.

Catchalls: I suggest you to try the IPv6 proxies in Xrumer first to see how this improves your efforts. I am using my own catchalls. It is some minutes of work to set up your own mail server on a small Linux VPS: https://www.linuxbabe.com/mail-server/postfixadmin-ubuntu

Metrics: Do these matter to Google? I doubt it. If you are really into metrics, https://seo-rank.my-addr.com/ gives you MOZ, Semrush and ahrefs figures at a low price.

Indexer: It is pointless to build links if these are not getting indexed at all. Consider an indexer at least for your T1 links to see results in organic traffic.

@organiccastle how do I get this picture? I can't find it in GSA.

I do have scrapebox, so I will look into re-learning how to get my own. I assumed these verified lists were better, but it's been a long time since I did any of this.

I'll look into Zoho!

For now, I'll just stick with the VPS I got. I can think about changing or whatever in future.

Ok, thanks. I'll keep 30 proxies for SER only then. And look into getting ipv6 for Xevil!

So PA doesn't matter anymore when passing juice through dofollow links? What do you mean supply is limited?

Thanks @malcom!

Thanks for the reply! Your solve rates look good, definitely going to get ipv6 proxies. Ahh that makes sense with the errors.

I do own scrapebox, it didn't occur to me to use it to spin articles. Don't you think it's easier/more simplified just using OpenAI and letting SER spin them with my spinrewriter API? It's then all auto, especially in future if working with multiple projects.
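For anyone new to the format being discussed here: spintax wraps alternatives in braces separated by pipes, and each pass picks one option per group. The snippet below is only a minimal sketch of that expansion. It is not how SER, Spinrewriter, or Scrapebox implement it, and the function name `spin` and the sample sentence are made up for illustration.

```python
import random
import re
from typing import Optional

# Matches the innermost {option|option|...} group (no nested braces inside).
_INNERMOST_GROUP = re.compile(r"\{([^{}]*)\}")


def spin(text: str, rng: Optional[random.Random] = None) -> str:
    """Expand spintax by repeatedly resolving the innermost group."""
    rng = rng or random.Random()
    while True:
        match = _INNERMOST_GROUP.search(text)
        if match is None:
            return text  # no groups left, the text is fully spun
        choice = rng.choice(match.group(1).split("|"))
        text = text[:match.start()] + choice + text[match.end():]


# Hypothetical sample sentence, one random variant per call.
sample = "{Great|Useful} {guide|write-up} on {link building|{SEO|search} basics}."
print(spin(sample))
```

Resolving the innermost group first means nested groups work without a real parser, which is enough for a quick sanity check of spun content before it goes into a project.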

I will setup my own catchalls then, makes sense. Do you see them burn out often/at all?

I have been running email marketing for the past 2-3 years. I can easily make catchalls, but I assumed I'd be switching them out often, so I thought of using a service.

I did consider indexing services. I used to use them a lot, but I've recently seen contradicting information on them, so I thought I'd wait.

Thanks for your replies guys, I just dove in to ensure I get the ball rolling, now I can tweak/change and get closer to the perfect setup especially with your advice.

I asked this years ago, and the answer depends on a lot of variables.. But how long does it take you to rank these days? Picture your own strategies now, if implementing on a new site, what time scale do you expect to get yourself top 10. Too many variables I know, just a figure would help give me an idea on say low-med competition keywords.

Thanks!

Edit: nvm, right-clicking did it!

Spintax: Spinrewriter is probably better (for English content). I don't have a subscription so I am happy to pay per use through Scrapebox / OpenAI.

Catchalls: I've never experienced any to be blocked / blacklisted. It is a one-time effort to set up the postfix thing and it just runs.

Indexing: I did wait, too. Too long. Used GSA Indexer plus various workarounds with sitemaps, more tiers, etc.. Now, I am happy to pay less than a cent to get my links indexed. Still running GSA Indexer and tiers though.

Results: I can see results within a week, 10 days for easy to medium keywords. Quite impressive. The content on your site makes a huge impact. GSA Keyword Research with its Article Writer is a beast to create & improve it. Take the suggestions + your original content, task OpenAI to improve it accordingly, give it a final touch and you'll rank way better and for so many more keywords. Yes, there is an additional cost for the license plus the APIs, but it will pay back in no time.

Yes not long after your post I bought them after trying multiple times (Russian/Rubles card not working etc) and it's running at 50 threads, although at the time I tested it was using just 12 or something. They seem much better though, thanks!

Yes I agree, for now anyway it's gonna help make that easier with spinrewriter.

That's great about catchalls, going to make my own now!

Less than a cent? That's awesome.

Yeah that's really impressive and happy to hear it! No special t1 stuff, just all through GSA and scraping your own links etc?

I'll definitely have to get the Keyword Research tool, does that also work better than Keyword Planner? (forgive me if that's stupid, like I said, it's been years!) haha

Thanks for your input, much appreciated!

So do you guys who use 5, just ignore those errors? Or scrape sites likely to not have v3? (above info is according to reproxy.network) I asked them because I thought it was proxy error.

So I started using reproxy.network, they advised the UKR ipv6 so that's what I'm using and check this:

No idea why/what's going on. I have these selected in cores:

Is this the issue?

So what way do you use to index backlinks? I use Indexer and build tiers for projects. Google indexing is so slow. I submitted backlinks for indexing 18 days ago and only a few have been indexed.

How do I find more high-quality lists to post backlinks?

Thanks, I'll keep playing around with settings. I have it set as "ask all services to fill captchas". I'll try with the engines selected as organiccastle showed a screenshot of and see how that goes.

I will also try with XEvil first and CB second etc, like you say.. gotta find a sweet spot

I'm playing around for now, just blasting a blog I made getting used to the systems again.

I'll definitely allocate some time to learn scraping my own again, more relevant to niches etc.

I left it blasting since my last post and have results like this:

Maybe all I need is the extra neuronets, time will tell and when I finally get to a better % I'll update how.

Here's my settings for XEvil in SER:

I had retries at 2, just changed to 1.

Also, I moved XEvil above CB now, see how it goes.
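The ordering and retry settings discussed above boil down to a fallback chain: ask the first solver, retry a set number of times, then move on to the next one. Here is a rough sketch of that idea only. The solver functions are stand-in stubs, the names are hypothetical, and this is not SER's actual logic or any real solver's API.

```python
from typing import Callable, Optional, Sequence, Tuple

# A solver takes the raw captcha bytes and returns an answer, or None on failure.
Solver = Callable[[bytes], Optional[str]]


def solve_with_fallback(
    captcha: bytes,
    solvers: Sequence[Tuple[str, Solver]],
    retries: int = 1,
) -> Optional[Tuple[str, str]]:
    """Try each solver in order; each gets 1 + retries attempts."""
    for name, solver in solvers:
        for _ in range(1 + retries):
            answer = solver(captcha)
            if answer:
                return name, answer  # which solver succeeded, and its answer
    return None  # every solver failed


# Stub solvers for the demo (assumptions, not real services).
def primary_stub(captcha: bytes) -> Optional[str]:
    return None  # pretend the primary solver cannot read this captcha


def backup_stub(captcha: bytes) -> Optional[str]:
    return "abc123"  # pretend the backup solver gets it


result = solve_with_fallback(
    b"fake-image-bytes",
    [("primary", primary_stub), ("backup", backup_stub)],
    retries=1,
)
print(result)
```

Putting the cheapest solver first and keeping retries low limits how long a thread waits on a captcha the first solver was never going to read.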

Maybe it could also be the sitelists? The types of sites it's trying to solve captchas on?

This is an improvement which is good, but would love 60%+

Edit: I just noticed in my previous post, I have Recaptcha v3 selected. If reproxy.network support said I need XEvil 6 for that, should I untick Recaptcha v3 then?

I'll do that, reset stats and check again later.

My memory was vague, it was serprobot I loved because it tells you the first rank seen, then also it's best rank, and latest like this:

So it was great to see a new url pop in at 86 for example, and then see that it then changes to 12, if it moves back to 19, it will keep the best at 12 so we know the best position it's been at.
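The first/best/latest bookkeeping described above is simple to reproduce if you ever want it in your own scripts. This is a minimal sketch of the idea and not serprobot's implementation; the class name `RankHistory` and its fields are made up for illustration, and the positions are the ones from the example above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RankHistory:
    """First, best, and latest SERP position seen for one keyword/URL pair."""

    first: Optional[int] = None
    best: Optional[int] = None
    latest: Optional[int] = None
    previous: Optional[int] = None

    def record(self, position: int) -> None:
        """Store a new position; a lower number is a better rank."""
        if self.first is None:
            self.first = position
        if self.best is None or position < self.best:
            self.best = position
        self.previous = self.latest
        self.latest = position

    def movement(self) -> str:
        """Direction of the last change (the green/red arrow)."""
        if self.previous is None or self.latest == self.previous:
            return "same"
        return "up" if self.latest < self.previous else "down"


history = RankHistory()
for position in (86, 12, 19):
    history.record(position)

print(history.first, history.best, history.latest, history.movement())
# prints: 86 12 19 down
```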

They have updated UI since I used it, but it's still the same so I'm happy

Had to check emails from 2013/2014

Probably hCaptcha has been the issue, and I had 50 threads too.

I'm using reproxy.network proxies, which rotate, and it looks like there are 999 IPv6 proxies in there for $5/mo.

Oh I see, yeah I visit the site for info but it's nostalgic to be using this and I like the info it provides and it's really cheap, like $5/mo for 75kw.

Hmm ok I'll keep that in mind, thanks.

I believe you can further optimize load on your machine by putting GSA 1st for image captchas. But since I don't have a license, this is just a guess.

Add a commercial captcha solver for the hCaptchas to get another 5% solved. @backlinkaddict is happy with 2captcha, I am with capmonster.cloud.

You have the tool at hand. Consider the theoretical idea of testing the links in the lists with your competitors' sites. Obviously, you don't want their rankings to improve, you just want to test the lists. So, take the content of your competitors' websites as input and spread it to all the target sites the lists give you. Google honors duplicate content a lot.

I personally rely on the various filters within SER for my self-scraped lists as well as for bought lists. OBL, words, language, countries, etc. for T1 links, less restrictive settings for T2 up. DA, DR, spam score are irrelevant to me, only rankings and thus traffic matters.
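To make the "strict for T1, looser for T2 and up" idea concrete, here is a small sketch of filtering a target list by outbound links (OBL) and language. It only illustrates the concept: SER applies these filters through its project options, not through code like this, and the class names, thresholds, and example URLs below are all assumptions.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Target:
    url: str
    outbound_links: int  # OBL count found on the page
    language: str        # detected page language, e.g. "en"


@dataclass(frozen=True)
class TierFilter:
    max_obl: int
    languages: Optional[FrozenSet[str]] = None  # None means any language

    def accepts(self, target: Target) -> bool:
        if target.outbound_links > self.max_obl:
            return False
        return self.languages is None or target.language in self.languages


def filter_targets(targets: List[Target], tier: TierFilter) -> List[Target]:
    return [t for t in targets if tier.accepts(t)]


# Hypothetical thresholds: strict for tier 1, relaxed for tier 2 and up.
TIER1 = TierFilter(max_obl=50, languages=frozenset({"en"}))
TIER2 = TierFilter(max_obl=300)

targets = [
    Target("https://example.com/a", outbound_links=20, language="en"),
    Target("https://example.com/b", outbound_links=120, language="en"),
    Target("https://example.com/c", outbound_links=30, language="de"),
]

tier1_urls = [t.url for t in filter_targets(targets, TIER1)]
tier2_urls = [t.url for t in filter_targets(targets, TIER2)]
print(tier1_urls)
print(tier2_urls)
```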

It seems they have also changed their IPv4 setup recently. I am using these for GSA Website Contact now and can't complain about anything but the proxy speed.

For scraping, I am using webshare proxies with the Google-option, for SER submissions cheaper webshare proxies without that option but more traffic volume.