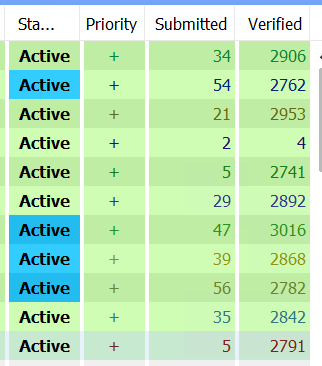

Low LPM

Currently I have a problem with low submissions. I am using a verified list, proxies from Storm Proxies and MyPrivateProxy, emails from catchallboxes.com, and XEVIL for captchas. It has been very slow for the last few days. What could be the problem? I've had this problem for a week now, since a recent update.

Comments

1. May I also know which captcha solver and proxies you are using?

2. My proxy provider (Storm Proxies) said I can't use more than 10 threads or they will ban me, since I was using their rotating proxies. If you have any better recommendations, please share some of your providers. That's why I use a low thread count for my projects. I currently run 10 projects at the same time.

It would be great if I could get at least 100 LPM.

Once again, I'm thankful for your advice.

Captcha

For captcha I have different set ups running on different machines.

1st Set Up: The best one uses GSA Captcha Breaker and XEvil with v2/v3 solving. That install generates the most links, and using both captcha solving tools together lets you make links on a lot more sites. Most list sellers use both tools to build their lists; Serpgrow definitely does. Each tool is good at solving different types of captchas, so it's worth having both.

2nd Set Up: On the other machines I'm using just GSA Captcha Breaker and XEvil, with v2/v3 solving disabled. Slightly fewer sites, but things run a lot faster when v2/v3 is disabled. Plus I don't have to pay extra for XEvil proxies to solve v2/v3.

3rd Set Up: No captcha solving. Far fewer sites produce verified links, but the VPM is pretty nuts on the VPS I'm using.

I still see ranking increases, but that's due to the list I've built for myself and the strategy I use. Even without captcha solving, I can still build links on a few thousand sites if you include blog comments and URL shorteners. If you include indexers, this install can make links on over 80k domains with no captcha solving, lol. That's plenty to be able to rank with.

So up to you. All 3 set ups work great, but the 1st is the most expensive as you need 2 sets of proxies, one for gsa and one for xevil.

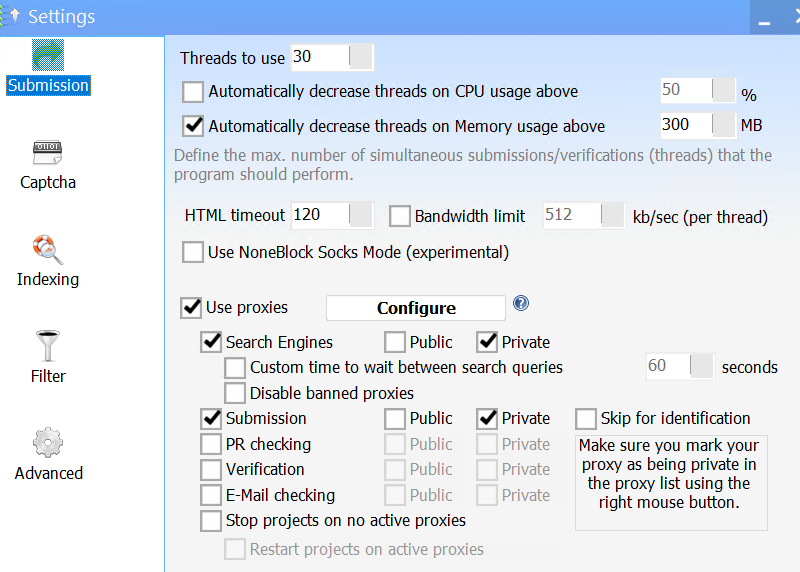

Proxies

Definitely need to be using dedicated proxies here. Avoid public proxies, and avoid shared proxies if you want to max out the speed. Storm Proxies for GSA link submission/verification is a big no-no. I recently started using 10gbps private proxies from here: https://www.leafproxies.com/collections/monthly-datacenter-proxies/products/october-monthly-uk-proxies-10gbps (update: don't use these proxies, they'll ban you; they are not SEO-friendly)

The vps I have is in helsinki, so UK proxies still run pretty fast for me. At the very least you need to be using 1gbps private proxies if you want to see an improvement in your vpm, along with a vps that has a 1gbps connection.

I've got 35 proxies in total and they're running on 39 different VPSs, each at 300 threads. That's about 12,000 threads in total (39 × 300 = 11,700) being run through 35 dedicated proxies. Speeds are amazingly fast. The proxies are $2 each, so it's not expensive. As they are 10gbps proxies, I can run 10 times as many threads through them compared to my previous supplier, which had 1gbps speeds. Plus it's unlimited: run as many threads as your VPS can handle.
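As a rough sanity check on the numbers in that reply: 39 VPSs at 300 threads each is 11,700 threads (the "about 12,000"), which works out to roughly 334 threads sharing each proxy, and 35 proxies at $2 each comes to $70. A minimal Python sketch of that arithmetic, assuming every VPS runs the full 300 threads and the load is spread evenly across all 35 proxies (the helper names are hypothetical, just for illustration):

```python
# Worked arithmetic for the setup described in the post.
# Assumption: every VPS runs the full thread count and load is spread evenly over all proxies.
PROXIES = 35
VPS_COUNT = 39
THREADS_PER_VPS = 300
PRICE_PER_PROXY_USD = 2


def total_threads(vps_count: int, threads_per_vps: int) -> int:
    """Total concurrent threads across all VPS instances."""
    return vps_count * threads_per_vps


def threads_per_proxy(threads: int, proxies: int) -> float:
    """Average number of threads sharing each dedicated proxy."""
    return threads / proxies


threads = total_threads(VPS_COUNT, THREADS_PER_VPS)
per_proxy = threads_per_proxy(threads, PROXIES)
cost = PROXIES * PRICE_PER_PROXY_USD

print(f"total threads: {threads}")             # 11700
print(f"threads per proxy: {per_proxy:.0f}")   # 334
print(f"proxy cost: ${cost}")                  # $70
```

The same two functions can be reused to compare other setups mentioned in this thread, for example 800 threads over 90 proxies.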

Storm proxies is only good for scraping non-google search engines and also for ctr direct traffic bots.

Once you've built your verified list and changed your proxies to dedicated, you'll notice a massive improvement in vpm/lpm. At 300 threads you should be hitting between 200 and 400 lpm/vpm easily.

https://reproxy.network/#prices

Best prices I've found with excellent solve rates.

I can't speak for seremails, I don't use their service. I use my own private catchall emails set up on vps from solidseovps. I have a lot of installs running with a lot of projects, so the server receives a lot of connections simultaneously and starts to reject my connections.

It depends on how fast you run it, how many projects you have and how many installs you're running. If you run just one install with 10 projects, you probably won't notice an issue. But if you run 6000 projects simultaneously, then you will definitely start to run into issues and will need to use proxies for email verification, along with additional delays between each connection per email account.

How many proxies do you need per xevil threads to prevent them from getting banned?

With "slightly fewer sites" on set up 2 vs set up 1, is set up 2 so much faster that it builds more links overall per day than set up 1? I'm also wondering whether some or most of the sites using reCAPTCHA v2/v3 would be higher quality overall and worth the extra time and cost to get a link from.

I'm getting hardly any bans. They charge by how many solving threads you'll use. 50 threads is plenty for one gsa install, but this may depend on how many v2/3 sites are in your list.

With regard to set up 2 vs set up 1, yes, you're absolutely right. It's definitely worth getting links on more sites and using v2/v3, especially in tier 1. The proxies only cost 5 bucks for IPv6 for 50 solving threads.

Set up 1 is what I'll be using for my own business, although my tests with the no-captcha site list are still moving rankings up, so in my case it's actually not necessary to use any captcha solving at all. Also, when I see 500+ VPM with no captcha solving, I can finish 3 days of campaigns in literally 1 day. The impact on rankings when you can build links that quickly is quite phenomenal. That's why I prefer speed over sheer site numbers, given the choice.

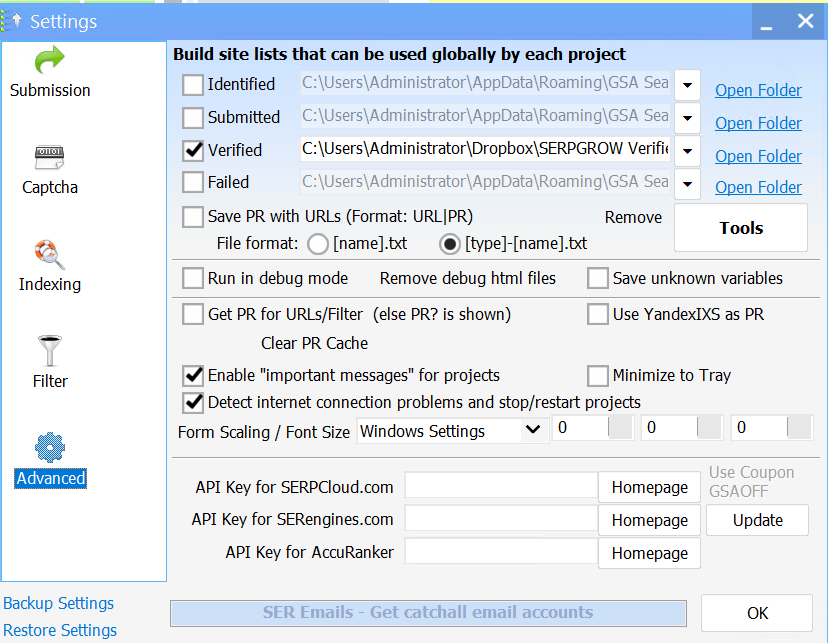

How do you scrape Google with just 35 dedicated proxies?

Those installs are just doing link building. For scraping new sites, I use Hrefer on separate machines, with either public proxies or Storm Proxies, scraping non-Google search engines. This syncs to a folder, which then gets processed by Platform Identifier, which creates an identified list for me automatically. This identified list then gets processed by one project on one of my GSA installs. It's all automated, and this adds sites to my working verified list. The verified list is also synced to all my installs, and all servers build new links from this verified list.

I prefer to use GSA SER for what it's really good at, which is building links at speed. If you do both link building and scraping at the same time inside GSA SER, you will notice your VPM suffer considerably, as you are using its resources to scrape sites and then test them, and most of them won't be working sites. While your GSA is scraping and testing, mine are just building links 24/7 from a pre-filtered and tested working verified list. That's why my VPM is usually very high.

Hence why I keep the 2 processes separate and even use different tools to complete the scraping job.

Best to just test for yourself and see what works for you and your set up.

I am using proxies from Webshare; they are very cheap and almost all of them work all the time.

I have also tried proxies from Instant Proxy, but the results are the same.

My LPM is very low, around 5-20 LPM, when I run GSA on 800 threads with 90 proxies.

I am not using URL shorteners because they are useless.

I got my list from www.serverifiedlists.com

1. Do you have any solution on how to increase lpm?

These are the engines I use.

My setup:

- XEvil + GSA
- System: Ryzen 7, 4.5 GHz
- 32 GB RAM running at 3800 MHz
- NVMe SSD (super fast)
- RTX 3060

I'm guessing you are running all your projects on that purchased list? If you make a clean verified list first, it will run a lot faster.

Yes, they all run on the same purchased list.

How do I make a clean verified list?

I explained it here.

Also, is there a place to get a good list? To be honest, they all seem pretty trash.

So should I use URL shorteners and microblogs on tier 1?

Can't I use blog comments on tier 1?

For tier 1 I literally use any link that is dofollow and has the keyword as anchor text. If you build a 3-tier structure with enough T2 and T3 links, you will rank for every keyword used in the T1 links. Even those exploit links are a hidden ranking gem that no one really knows about, probably because they're too scared to use them on their money site, lol.

URL shorteners are great for tiers, especially if you just use the dofollow ones. I've pointed them at my money site as tier 1, but as there is no keyword anchor text in the T1 link, they don't directly influence rankings for a particular keyword. However, they will boost the page authority of any page they're pointed at, which will help boost rankings for any keywords that appear on your landing page. It's like "indirect" SEO.

There are plenty of other benefits, explained already. I'd do it once to all money site URLs as part of a 3-tier campaign, just to get the boost in DA. But to actually move keywords up the rankings, the above engine selections are what I'd recommend, as the majority of link sources are dofollow with keywords as anchor text.

I use microblogs because there is one site in my list with very good DA. It's no big deal whether you use them or not; it depends on what you have in your site list.

Blog comments can be very good for tier 1, as there are literally millions of them, so it's an easy way to boost your DA. Dofollow links from unique domains contribute to boosting a site's DA. I filter the OBLs on projects to a maximum of 100 OBLs. This filters out the heavily spammed sites with high OBLs. I've seen plenty of sites with 10,000+ OBLs, lol. You want to avoid these sites, as there is literally no SEO value when the OBLs are that high. The only place for these types of links is pointing them at links that need indexing.

You can use gsaserlist.one for a verified list. It's very useful.

I am using Webshare proxies (400 proxies), and they are working for me as of now. I run 2000 threads, and it can get expensive if I buy 200 proxies at $1 each.

Getting 140 LPM now on these 2000 threads. I'm sure it can get higher if I get a better list. I'm using serverifiedlists right now.

After I updated my list, my LPM increased a lot.

1. When I use shorteners, it feels like almost all the backlinks being created are just URL shorteners now.

I just spent so many hours creating 8 campaigns (5 tiers each) for my website. Let's see how fast it ranks now; I will post updates here!

I'm really confused about which engines to use, because I don't want to harm my sites.

If anyone genuinely knows a good list, please let me know. No referrals or any of that, LOL.

I am using these for now. I selected them using the option that appears when I right-click on the engine bar: "Uncheck engines that use no contextual links".