Upper tier issue

VMart

Natural SEO

I'm running an upper tier above PR6 with 10 dedicated private proxies, but GSA SER keeps extracting repeated links. Kindly advise me what my mistake is.

Comments

Please give me instructions to solve the issue.

I have not set any schedule.

Fix it by checking the identified list only (if you're using search engines to find targets).

Do this until you have built a big enough list to be able to use your verified list efficiently.

You may also want to change the timing settings for multiple posts. 2 hours is quite a long time to wait before another registration/post; you're practically waiting a total of 4 hours to obtain 2 posts from 1 target.
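The arithmetic behind that can be sketched as follows. This is only a rough model, assuming each post to the same target is preceded by one full wait interval (which is what the 4-hour figure implies); `hours_for_posts` is a made-up helper, not anything in SER.

```python
def hours_for_posts(posts: int, wait_hours: float) -> float:
    """Total waiting time for `posts` posts on one target.

    Assumption: every post is preceded by one full wait interval.
    """
    return posts * wait_hours


# The setting discussed above: a 2-hour wait, 2 posts per target.
current = hours_for_posts(posts=2, wait_hours=2)

# A hypothetical shorter wait of 30 minutes, for comparison.
shorter = hours_for_posts(posts=2, wait_hours=0.5)

print(f"2-hour wait: {current} hours for 2 posts")
print(f"30-minute wait: {shorter} hours for 2 posts")
```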

Tim89, in the Advanced settings I have unmarked the "Identified" option, but can I still check "Identified" under Options / "Use URLs from global site lists if enabled"?

Then turn that option on again.

It makes no sense to me, why don't people just listen?

Why have you unmarked the identified list in the advanced options? Where are the identified URLs being saved, then? Mate, you've got your settings all messed up, and that's the reason you're not building any links. First, check the option to save your identified list in the advanced options, so that when SER is searching for target URLs it at least has somewhere to store them, ROFL. Then go into your campaign, uncheck verified, check IDENTIFIED, and see what happens.

SER is repeating the same links because it has no other targets to hit and do you know why that is? Do you understand?

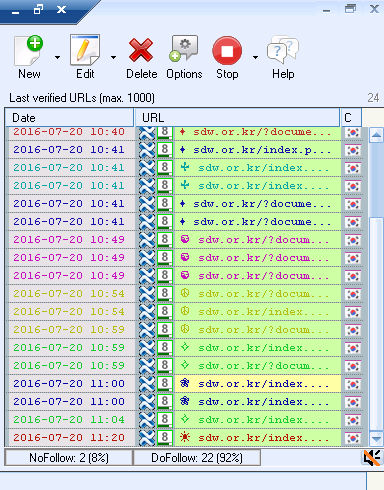

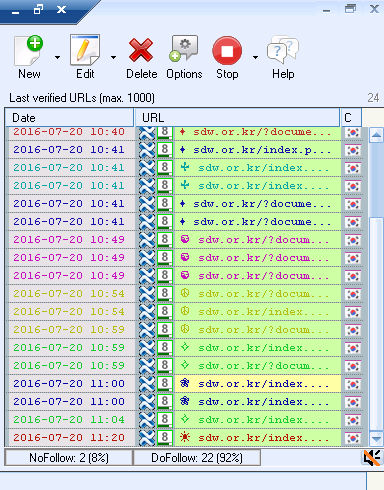

The problem is happening again...

Sven, you mentioned the option "reverify existing verified urls every 1440". Is that correct or not?

You mentioned only this part, and I have set it up like this.

Finally, I cleared "Delete unused accounts", and the following messages are appearing.

In the advanced options I have selected the Identified option, but no submissions are happening.

20 Projects (Tier 1) only

10 private dedicated proxies (buyproxies.org)

Every project has more than 700 niche-related keywords

30 Rediff mails (tested)

Use URL variations with 20 (Ticked)

Use the root/main URL with 30 (Ticked)

Above 700 Niche related keywords

Collect keywords from target sites (ticked)

Use collected keywords to find new target sites (ticked)

Put keywords in quotes when used in search queries (ticked)

Try searching with similar looking keywords (ticked)

Tier 1

Engine Article/Document Sharing/Social Network/Web2.0/Wiki

Filter URLs

Skip sites with more than 25

Skip sites with a PR below 3

Skip also unknown PR

GSA Captcha breaker

It has been 3 days, but only 8 submissions have been made. Why is that?

Is there a problem on my side? Please tell me.

Well, now you just need to find targets to post to. Add as many keywords related to your site as you can, so SER can scrape potential targets. Because you have set your PR and filter settings so strictly, you're not going to get an abundance of links. So, as I said: get yourself more than 5 proxies (your screenshot shows only 5), add more keywords, and perhaps think about lowering your link requirements if you want more links.
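To see why strict filters shrink the pool, here is a rough sketch. It assumes a made-up list of scraped candidates where each entry is just a PR value (`None` meaning unknown PR); the numbers are invented for illustration and `passes_pr_filter` is a hypothetical helper, not how SER works internally. It only mirrors the two settings listed above: "Skip sites with a PR below 3" and "Skip also unknown PR".

```python
from typing import List, Optional


def passes_pr_filter(pr: Optional[int], min_pr: int = 3,
                     skip_unknown: bool = True) -> bool:
    """Return True if a candidate with this PR survives the filter."""
    if pr is None:
        # Unknown PR: kept only when "skip unknown PR" is switched off.
        return not skip_unknown
    return pr >= min_pr


# Invented PR values for ten scraped candidates (None = unknown PR).
candidate_prs: List[Optional[int]] = [None, 0, 1, 2, 3, 5, None, 0, 4, 1]

strict = [pr for pr in candidate_prs if passes_pr_filter(pr)]
relaxed = [pr for pr in candidate_prs
           if passes_pr_filter(pr, min_pr=0, skip_unknown=False)]

print(f"strict filter keeps {len(strict)} of {len(candidate_prs)} candidates")
print(f"relaxed filter keeps {len(relaxed)} of {len(candidate_prs)} candidates")
```

With this invented sample the strict settings throw away most candidates before SER even tries to post, which is the effect described above.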

At the end of the day, everyone should want to be building a massive verified list. With this list, you'll be able to create links whenever you want, no faffing around.

An identified link, or a search-engine-scraped target, is not a 100% target. It has been picked up by footprints, which doesn't mean SER will definitely be able to make a backlink from it. A verified link, on the other hand, is a target where you know 100% you can get another link from it over and over again, until the domain goes down or they change their platform or tweak it to prevent spam.

If you're not saving your verified links, then you're wasting a lot of effort in finding new targets.

If you want a project to find new targets and post only to those new potential targets, select "identified" alongside your search engines and leave the "verified" list unchecked. Then go to the SER advanced options and restore the site list structure to how it was originally: Identified saved to Identified, Verified saved to Verified. Once that is done, the flow is: SER finds a target, saves it into the identified list, makes a backlink, and saves the backlink into the verified list. I thought this was common knowledge.

When SER identifies a link while searching the search engines with keywords, it stores the identified targets in the identified site list, lol. I thought that was self-explanatory, to be honest.
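The identified-to-verified flow described above can be modelled roughly like this. It is a toy sketch, not SER's actual code: the two site lists are plain Python sets, the URLs are placeholders, and `try_post` is a hypothetical stand-in for "SER attempts to build a link on this target".

```python
from typing import Callable, Iterable, Set


def process_targets(scraped: Iterable[str],
                    identified: Set[str],
                    verified: Set[str],
                    try_post: Callable[[str], bool]) -> None:
    """Save every scraped target as identified; promote to verified on success."""
    for url in scraped:
        # Picked up by a footprint: a potential target, not a guaranteed one.
        identified.add(url)
        if try_post(url):
            # A backlink was actually built, so the target is verified.
            verified.add(url)


identified: Set[str] = set()
verified: Set[str] = set()

# Placeholder URLs standing in for search engine results.
scraped = ["http://a.example/", "http://b.example/", "http://c.example/"]

# Pretend only the second target accepts a post.
process_targets(scraped, identified, verified,
                try_post=lambda url: url.startswith("http://b"))

print("identified:", sorted(identified))
print("verified:", sorted(verified))
```

If saving to the identified list is switched off, the first step has nowhere to store new targets, and a project reading only from its existing lists keeps hitting the same ones.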

I'm happy with your helpful reply and suggestions.