VERIFICATION SUCCESS RATE [need help]

Need help, please.

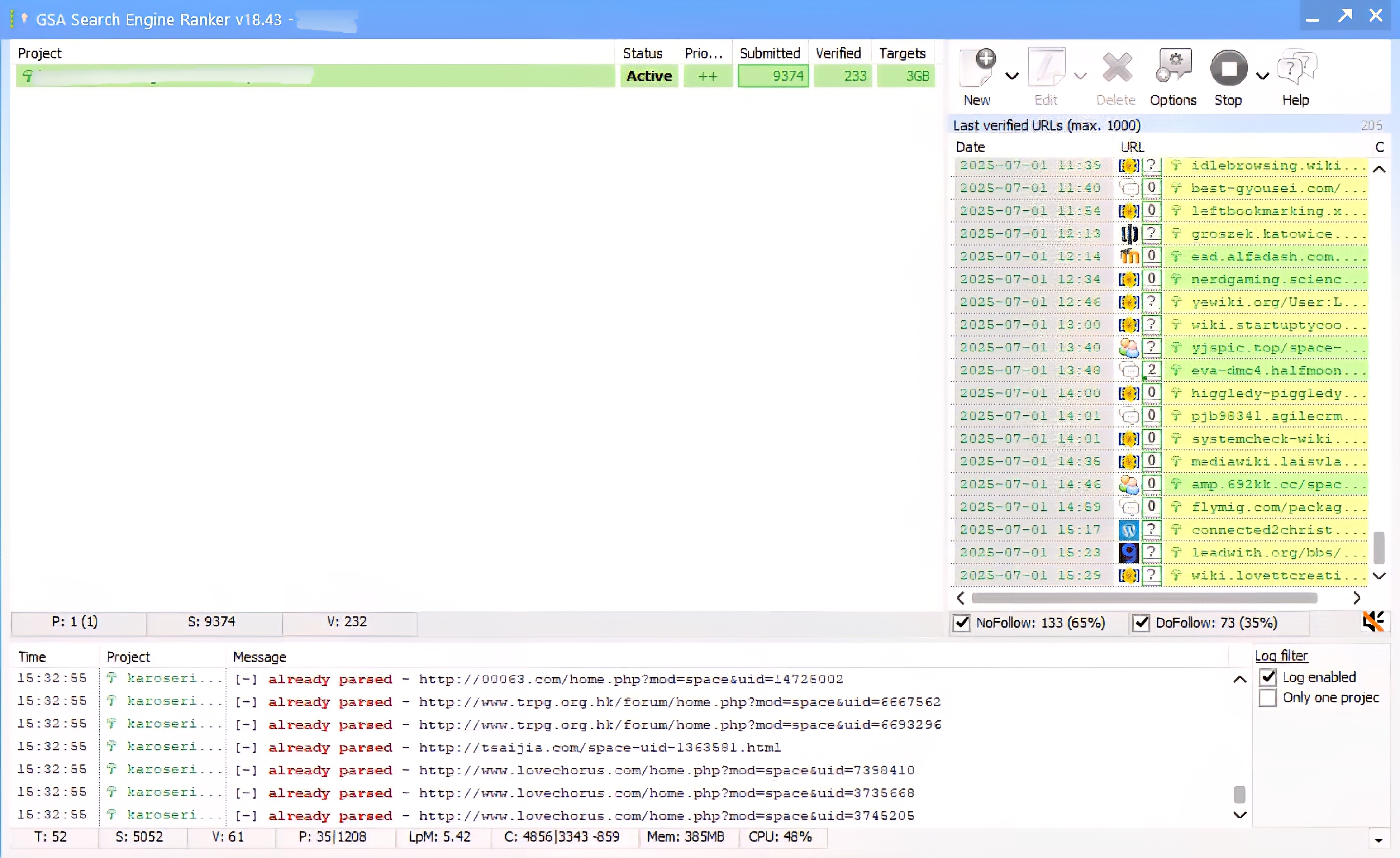

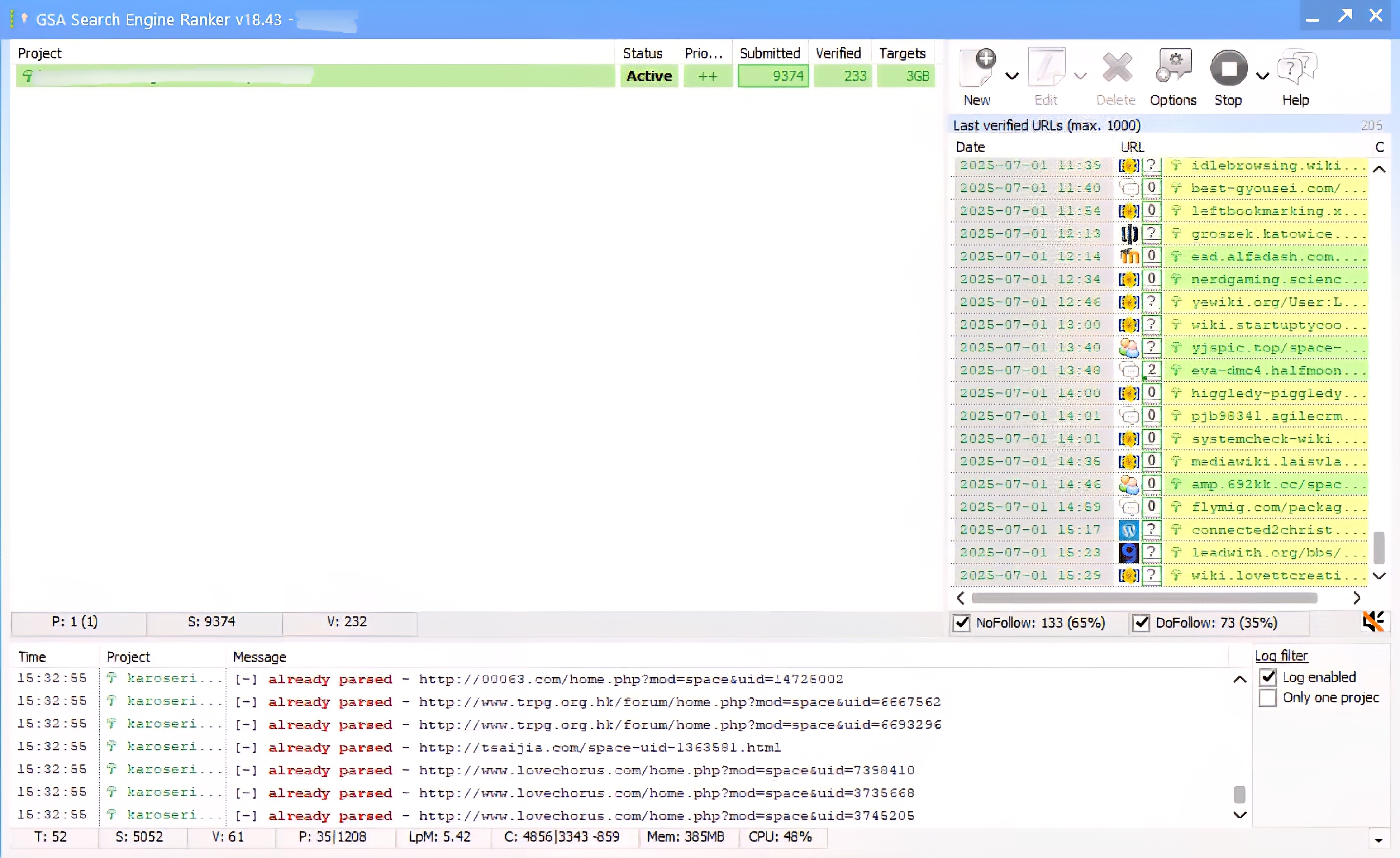

I have been running this campaign for 1 week, but why is it that out of 10,000 to 30,000 submitted, only 250 are verified?

I use private IPv4 proxies, the 0captcha and XEVIL captcha services, and email accounts on my own hosting. There are approximately 200 articles in spintax format, and verification is set to run every 3 hours.

FYI: the campaign runs all engines, except Redirect, Short Url Pingback

Comments

You've got a lot of "already parsed" messages in the logs - this means your site list contains duplicates.
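If you want to check a list for duplicates outside the software, here is a minimal sketch. It assumes the site list is plain text with one URL per line and that "duplicate" means the same host; the function name `dedupe_by_host` is just for illustration and is not part of GSA SER.

```python
from urllib.parse import urlsplit


def dedupe_by_host(urls):
    """Keep the first URL seen for each host, ignoring case and a leading 'www.'."""
    seen = set()
    unique = []
    for raw in urls:
        url = raw.strip()
        if not url:
            continue  # skip blank lines
        # urlsplit only fills in the host when a scheme is present,
        # so add one for bare entries like "example.org/page"
        candidate = url if "://" in url else "http://" + url
        host = urlsplit(candidate).hostname or ""  # hostname is already lowercased
        if host.startswith("www."):
            host = host[4:]
        if host and host not in seen:
            seen.add(host)
            unique.append(url)
    return unique
```

Comparing `len(urls)` with `len(dedupe_by_host(urls))` gives a rough idea of how much of the list is repeated hosts.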

It does not look like a clean verified list with duplicates removed. The rate of new links being verified is way too slow. So yes, it does look like you've wasted your money on this list.

A good verified site list should be able to make at least 100 new verified links per minute. (Contextuals and profiles at 300 threads).

With other settings configured, such as scheduled reposting, my servers can go as fast as 400-500 verified links per minute, which equates to over 500,000 links per day per server.
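As a quick check on those figures, here is the arithmetic as a small sketch. It assumes the install runs around the clock at a steady rate, which real runs won't quite do. The function names are made up for illustration.

```python
MINUTES_PER_DAY = 24 * 60  # 1440


def links_per_day(vpm):
    """Daily verified links at a steady verified-per-minute (VPM) rate."""
    return vpm * MINUTES_PER_DAY


def observed_vpm(verified, days):
    """Average VPM implied by a verified total over a number of days."""
    return verified / (days * MINUTES_PER_DAY)


for vpm in (100, 400, 500):
    print(vpm, "VPM ->", links_per_day(vpm), "links per day")

# The campaign in the first post: 250 verified in 1 week
print(round(observed_vpm(250, 7), 3), "VPM")
```

At 400 VPM that is 576,000 links per day and at 500 VPM it is 720,000, so "over 500,000" holds. By comparison, 250 verified links in a week averages out to about 0.025 VPM.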

That is what the software is capable of if you feed it a good verified list.

What factors/variables determine such good results?

I have tried to maximize my campaign by using good articles, IPv4 proxies, 3 layers of captcha solving (gsa captcha breaker, XEVIL, 0captcha) and email on my own hosting, so what else needs to be maximized?

You just need more working link sources. Buying site lists from different list sellers is one way to do this. The better way is to scrape them yourself, test them and build your own working verified list. You will find sites that most list sellers won't be selling in their lists.

If you want to get even more link sources, then take a look at the new sernuke engines: https://forum.gsa-online.de/discussion/33465/sernuke-custom-gsa-ser-engines I've added a few thousand new sites to my site list from just these engines alone and I'm still scraping and finding more every day.

GSA SER High VPM.mp4 That's one of my installs running close to 400 vpm using a list of around 6000 domains. Scheduled posting enabled - runs quicker by reposting to same accounts.

For scraping new sites I've built my own bots with ZennoPoster that scrape the Google, DuckDuckGo, AOL, Ecosia, Brave, Yandex and Seznam search engines. There are many more search engines out there that I can scrape. I'm only running 30 bots that target a small group of engines.

This is where your biggest opportunity is right now. You need a good scraping solution and also need more engines that you can scrape sites for. The more sites you have in your list, the easier it will be to rank when you start building your tiers with them.

For site lists, this is probably one of the better ones out there: https://gsaserlists.com/ They also include sites for the new sernuke engines. Their verified list does contain working sites, so you should see an increase in speed when using it.

Following your advice, I have just decided to buy GSA SER Link Lists.

The question is, do I have to use sernuke or not?

Personally I have high resource needs as I have multiple installs running software at high threads and most proxy providers will limit your threads and bandwidth. The only provider I found that has truly unlimited threads/bandwidth is privateproxy.me. I use their static datacenter proxies and got them at 50% off with code: 50OFF. Their starter package with 13 proxies is more than enough for running 1 gsa. Proxies are fast.

For RDP I'm using Terminals which is free.

Once you have the licenses from sernuke, you'll be able to build links on a lot more sites than just using the default engines. Links from more unique domains will lead to higher authority for your money sites if you power up the T1s with tiered links.

The more sites you can build links on, the easier it will be to rank your projects. Of course, you will still need to get your links indexed in Google to see results in rankings. But that's another challenge.

Will the backlink creation process run, and will it increase my VPM compared to before?

The gsa ser list should give you much better VPM than your previous list as it contains working sites - dead sites are frequently removed from their list and new sites are added automatically.

To optimise things further, you should clean that site list first and remove all non working sites. Once you've done that, things should run a lot faster. Although, if you are only getting 800+ links (including blog comments) from that site list, it hardly seems worth bothering!

Also, blog comments use a lot of CPU and run the slowest out of all the link sources. I wouldn't expect things to run fast when you have blog comment engines enabled.

You really need to learn how to scrape and test sites so that you can build your own site list. Until you do that, you'll never tap into the true potential of the software.

It looks like you are running T2 campaigns, so that's a good time to be using re-posting options so that the software creates multiple accounts and posts from the same site list.

That's one example, but you may have to tweak settings. Enabling the "per url" option will have the effect of re-using the same site list and point each site at each T1. So it will make a lot more links a lot quicker.

The software is capable of making 800 links in a few minutes - it shouldn't take 1 week to make 800 links.

Scrape blog comments and guestbooks. Use GSA PI to identify targets and clean up the list. GSA PI also has a feature to extract external links. You can also use scrapebox to crawl all internal links of the websites then extract the external links. Do one of the two.
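For anyone who wants to see what "extract external links" amounts to, here is a rough sketch using only the Python standard library. It is not how GSA PI or Scrapebox do it internally. It assumes you already have the page HTML as a string, and `external_links` is a made-up helper name.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def external_links(html, page_url):
    """Return http(s) links that point to a different host than page_url."""
    page_host = urlsplit(page_url).hostname
    parser = LinkCollector()
    parser.feed(html)
    found = []
    for href in parser.hrefs:
        absolute = urljoin(page_url, href)  # resolve relative links
        parts = urlsplit(absolute)
        is_web = parts.scheme in ("http", "https")
        if is_web and parts.hostname and parts.hostname != page_host:
            if absolute not in found:
                found.append(absolute)
    return found
```

The hosts of the returned links are the candidate new targets. They would still need to be identified and tested before going into a site list.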

No one is doing anything like that anymore. To be fair though, it's harder these days to build a decent list with just the default engines, especially do follow contextuals to use as T1 link sources. Gnuboard was the last cms that was great for this - but over the last couple of years the working site numbers have been dropping pretty quickly.

Which is why it's unlikely you will find any list seller that offers a list with thousands of working sites. You'll be lucky to get a few hundred contextual sites and even then maybe 80% of them will be no follow. No good for T1.

Without the sernuke engines I was very close to abandoning the software, as the majority of my targets have been blog comments, guestbooks, redirects and indexers. None of these are any good for T1.

I've been building my PBN network for the last 3 years and that's the route I'm likely to continue with. These automated tools like gsa ser and rankerx are better for T2/3. They can't be sustained as a T1 link source.

Hello everyone,

Since this topic is about GSASERLists.com, I’d like to explain that my lists go through a live algorithm to ensure all links are active and match the engines. I'm not using GSA Platform Identifier for this process, unlike most other GSA link list providers. This means I can confidently say that most of the links are active, and any non-working ones are automatically removed throughout the day. A link may be re-added to the lists if the algorithm detects it has come back online. To verify this, I checked the verified link list, which includes around 757K targets, using Scrapebox’s Alive Check add-on. I’ve uploaded a video of the results in this comment. Even the few URLs marked as "Not Alive" were actually live, likely because I didn't use proxies during the check or due to the size of the webpages.

TimothyG said: It could be an issue with his set up such as the emails or even proxies. But I'll test it and see.

No worries about the cost - it's a legitimate business expense, so it will offset my tax bill.

Just waiting to receive the list via dropbox.

I don't use such things to build and check my verified lists. I use GSA SER to do this. I suspect every user that purchases your list will also do the same. This is the only way to ensure that a site works and results in a verified link.

A site may still be live but have registration disabled or sending of emails disabled - only GSA SER can check if a site still yields a verified link - scrapebox and a custom algorithm cannot do this.

That's exactly the point! The targets must be recognized and processed as such by GSA SER.

I also gain different results with other tools but that's not the point when talking about SER link lists.

You mentioned the sernuke engines positively. These are up-to-date and perfectly optimized. Many of the standard SER engines are no longer up to date and so targets are just left out, no matter what other tools report.

I'm sorry, but this isn't possible as I'm strictly trying to keep the lists limited to a small number of buyers. Currently, the monthly subscription is also sold out, with 60 members already joined. I've already granted access to @sickseo and will consider his feedback to implement better automated approaches.

I also don't use Scrapebox to check the lists. Everything is written in Python, and the algorithm follows the engines. That said, you're right. Although each target has gone through GSA SER, later checks can only verify if the target is GSA SER-friendly. They can't determine if registration is still possible without further checking, which is time- and resource-consuming. So, I'm leaving those targets for GSA SER.

I digress, and will wait for the analysis by @sickseo

have a good one

The new sernuke engines combined have so far yielded over 2000 verified sites and increasing. The gitea site list alone has 3592 sites, so I'll see how many of those are still working. These projects have only been running for a couple of hours, so still early days.

What I did find amusing is that the vast majority of the links in the gitea site list were made by me as I can see my keywords/brand names in the url from my client projects and personal projects. lol

It just goes to show that the sernuke engines are good link sources with good indexing rates as my backlinks have been scraped from Google by someone else and compiled into this site list.

@Alitab First impressions - I noticed no mediawiki sites at all in the list - My scraped list has a few hundred media wiki sites, so they are out there. Although 99% of them are no follow, still good to have as last tier to point at things that need crawling/indexing. Wiki links also have good indexing rates. I've even got .edu wikis with metrics as high as PR9 in my list.

Also looks like you've not got any sites for the latest sernuke engine real estate package - would be nice to see what sites you can get for those engines. Hope you can get those added soon.

Issues that I can see already - As an example, the wordpress article site list is showing 1314 sites! It would be amazing if that were true and I made 1314 verified links - that's a PBN network right there.

But I suspect many of the sites no longer work and your current system isn't set up to remove the non-working sites. As a result, your site numbers just keep increasing, because the sites aren't run through gsa ser again weeks or months later to recheck that they can still make a verified link. That's what's causing the projects to have high submitted numbers - these links will likely never be verified.
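To illustrate the kind of recheck I mean, here is a minimal sketch. It assumes you keep a record of when each site last produced a verified link; that record, the 30-day window and the name `prune_stale` are all hypothetical and not something the list or GSA SER provides in this form.

```python
from datetime import date, timedelta


def prune_stale(last_verified, today, max_age_days=30):
    """Keep only sites whose last verified link is within max_age_days.

    last_verified maps a site to the date it last yielded a verified link.
    """
    cutoff = today - timedelta(days=max_age_days)
    return {site: seen for site, seen in last_verified.items() if seen >= cutoff}


# Hypothetical records for illustration only
records = {
    "site-a.example": date(2025, 1, 28),
    "site-b.example": date(2024, 10, 2),
}
print(prune_stale(records, today=date(2025, 2, 1)))
```

Sites that drop out would be retested later and only re-added once they make a verified link again, so the site count reflects what works now rather than what worked once.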

@TimothyG There is definitely something wrong with your setup. I've already exceeded your total verified numbers within a few hours of testing the list - projects are still running and verified numbers are still increasing on my test projects. Your proxies and emails would be the first thing to check/replace. Otherwise I'm using xevil with recaptcha, hcaptcha and deepseek for text captcha, which I'm guessing is the same as your setup?

@the_other_dude I'll publish my final numbers once the list has finished processing. For users that don't have their own scraping set up, I think it's a pretty good list - just for the sernuke sites alone, I think it's worth it. We both know how long it takes to scrape and build a list for all the ser nuke engines - that's a lot of scraping bots. There are new sites in the list coming through that I don't have yet. Maybe I would have found them eventually - who knows.

However, for a user that hasn't invested in the sernuke engines, the numbers for T1 link sources such as article, forum, social network and wiki sites are pretty low. Although this is more likely to do with the engines themselves and the lack of sites available for them.

We all have our different methods for scraping. Even a modified or custom footprint can lead to different sites being found, as can using the same footprints on different search engines. If we all used the same default footprints and scraped the same search engines - all of our lists would be identical.
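A small sketch of why the combinations matter: every extra footprint, keyword or search engine multiplies the number of distinct scrape jobs. The footprint and keyword strings below are placeholders for illustration, not footprints taken from any engine file, and `build_jobs` is a made-up helper.

```python
from itertools import product


def build_jobs(engines, footprints, keywords):
    """Pair every search engine with every footprint + keyword query."""
    return [
        (engine, f'{footprint} "{keyword}"')
        for engine, footprint, keyword in product(engines, footprints, keywords)
    ]


# Placeholder inputs for illustration only
engines = ["google", "brave"]
footprints = ['"Powered by Gitea"']
keywords = ["seo", "garden"]

for engine, query in build_jobs(engines, footprints, keywords):
    print(engine, query)
```

Two engines, one footprint and two keywords already give four different jobs, so two people with slightly different inputs will end up scraping different result sets.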

I'll wait till the list has completely finished processing before I draw any final conclusions.