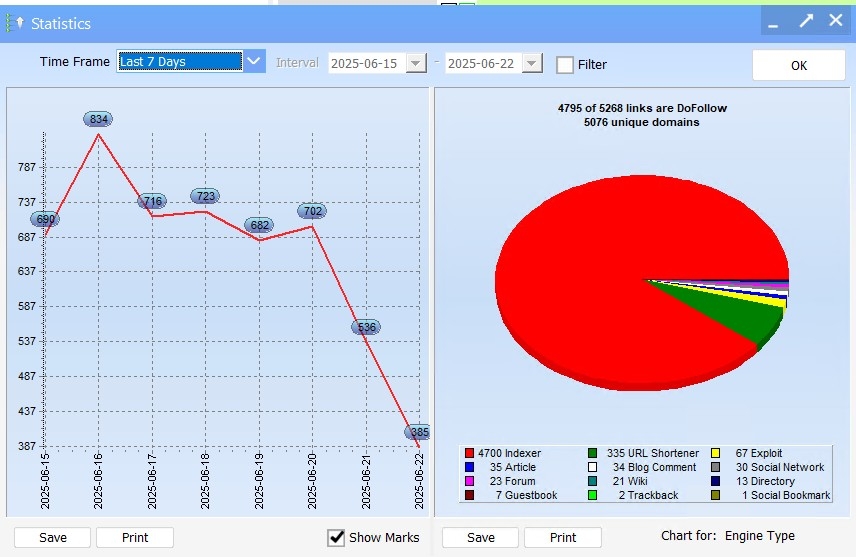

Hi, most of the links GSA SER has built for me come from indexing services (I have SER linked with GSA SEO Indexer). This can't be healthy, right? How do I get a more evenly distributed profile, where the other backlink types make up a larger percentage of the links?

Comments

If you want more of the other types, such as articles, forums, social networks and wiki links (best for T1 and T2), you have to scrape for them, then test them so that they get added to your site list and used in future projects.

A question: should I uncheck the indexer option in GSA SER? Of course, I would still use GSA Indexer to index the backlinks built here (but those are Tier 3, right? GSA Indexer backlinks point to GSA SER backlinks, which point to the "natural" backlinks to my money site).

1) What are some good vendors for these site lists? Recommendations and links would be much appreciated!

2) Would using site lists from vendors cause any issues, since everyone will be using the same site lists and therefore the same URLs for backlinks? (Sorry if I sound ridiculous. I'm totally new to this.)

If you are serious about getting results then you really should look into setting up a dedicated scraping system for all the major search engines. This is how you tap into the real ranking power of the software. You'll never achieve that through paid site lists.

- Lists shared by hundreds of other users have much less value, as the sites on them are flooded with posts and external links. They are literally leaking link juice.

- Sites with hundreds of thousands of low quality posts on them make getting new posts indexed that much harder. The site has already been flagged as low quality by Google. Your single high quality post won't change this.

- Plus, the number of sites per engine from list providers is embarrassingly low. I'd be embarrassed to sell those site lists.

The new SERNuke engines are the best link sources to use for any tier. I'm using 3 tiers of them and get very good natural indexing on them, with no indexing service, just tiers.

As for the tiered structure, there are lots of options here, but I'm currently running the following structure:

A single T1 project is powered by 5 T2 projects, and each of those is powered by an additional T3 project. This is a 1-5-5 project. You can go bigger for more competitive projects, even a 1-10-100, which I used to run in the past, but you'll probably need a whole server to run something that big continuously for several months.

I also have the tier option set to build links only to do follow links. Links are reverified automatically every 3 days to remove dead links. The projects use do follow and no follow link sources across all 3 tiers. Each T2/T3 project can easily go as high as 200,000 links, so it's quite a big structure being built.
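To make the "1-5-5" and "1-10-100" naming concrete, here is a minimal Python sketch that turns a layout into project counts per tier. The class and method names are hypothetical (they are not SER settings), and the link ceiling simply multiplies the poster's "can easily go as high as 200,000" figure by the number of T2/T3 projects, assuming every project reaches it.

```python
from dataclasses import dataclass


@dataclass
class TierLayout:
    """Hypothetical model of a 3-tier project layout such as 1-5-5 or 1-10-100."""
    t2_per_t1: int  # T2 projects pointing at the single T1 project
    t3_per_t2: int  # T3 projects pointing at each T2 project

    def projects_per_tier(self) -> dict[str, int]:
        t2 = self.t2_per_t1
        return {"T1": 1, "T2": t2, "T3": t2 * self.t3_per_t2}

    def total_projects(self) -> int:
        return sum(self.projects_per_tier().values())

    def link_ceiling(self, links_per_project: int) -> int:
        """Upper bound on T2 + T3 links if every lower-tier project reaches the same count."""
        counts = self.projects_per_tier()
        return (counts["T2"] + counts["T3"]) * links_per_project


one_five_five = TierLayout(t2_per_t1=5, t3_per_t2=1)
one_ten_hundred = TierLayout(t2_per_t1=10, t3_per_t2=10)

print(one_five_five.projects_per_tier())    # {'T1': 1, 'T2': 5, 'T3': 5}
print(one_ten_hundred.total_projects())     # 111
print(one_five_five.link_ceiling(200_000))  # 2000000
```

The jump from 11 projects to 111 is why the bigger layout needs its own server.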

It's a very nice automated way to build the tiers. The authority of the link sources is quite varied. Although GSA shows PR0 on the vast majority of sites, there are still a fair number of PR1-PR8 link sources in there too. This is fairly meaningless, though: when checking DR and DA stats, the metrics vary again, with some sites having DR but no DA, and vice versa.

I have other strategies in play that boost the authority of all the link sources anyway, so a DA0 site used today could well become a DA30+ site in the future, once the tiers have been built. It takes several months of running this project before you start seeing any impact on rankings.

Thanks for this detailed explanation, and thanks for all your contributions to this forum.

Why do you have 5 T2 projects when you could just run one T2 project with the same number of articles as the 5 T2 projects combined?

Is there any advantage to having more than one link from the same domain?

The goal is to use unique domains pointing at the tiers below.

But if a domain is used more than once to point to the same link in the tier below, only the anchors will benefit, as each extra link sends a signal for different keyword/branding anchors. You won't gain any extra authority, because the domain has already been used once.
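A minimal Python sketch of checking a backlink list for repeated domains, using only the standard library. It assumes that "domain" means the URL's hostname with a leading `www.` removed; it does not resolve registrable domains, so `a.example.com` and `b.example.com` count as two hosts. The function names are hypothetical.

```python
from collections import Counter
from urllib.parse import urlsplit


def links_per_domain(backlink_urls):
    """Count backlinks per host, treating 'www.example.com' and 'example.com' as one."""
    counts = Counter()
    for url in backlink_urls:
        host = urlsplit(url).hostname  # already lowercased; None if the URL has no host
        if not host:
            continue
        if host.startswith("www."):
            host = host[4:]
        counts[host] += 1
    return counts


def repeated_domains(backlink_urls):
    """Hosts that appear more than once: extra anchors, but no extra domain authority."""
    return {host: n for host, n in links_per_domain(backlink_urls).items() if n > 1}


links = [
    "https://www.example.com/profile/abc",
    "http://example.com/article-2",
    "https://blog.sample.org/post",
]
print(len(links_per_domain(links)))  # 2 unique domains
print(repeated_domains(links))       # {'example.com': 2}
```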

1) When you have 5 Tier 2 projects, how much do you differentiate the Settings (i.e., the "Where to Submit" fill-ins)? If I were to do multiple Tier 2 projects, I don't want SER to be searching for and posting to the same links across all 5. How do I prevent this?

2) You mentioned SERNuke's engines. I took your advice and looked at them. Did you buy all the engines or only a couple? Which engine would you recommend for sites that review products on Amazon?

3) Your Tier 3 projects build far more links than Tier 2, and especially Tier 1, correct? The only way I know how to do this right now (remember, I'm a beginner) is to click on Indexer services, which I'd imagine you're not doing. How do you get Tier 3 to build a lot more links than Tier 2? (I don't build Tier 1 links myself; I just boost existing "natural" Tier 1 backlinks.)

Any help would be appreciated! You're an absolute GSA SER guru!

The traditional setup is to use T3 (last tier) projects with engines like blog comments, guestbooks, indexers, redirects and even wikis. These engines tend to be good for getting links in T2 crawled, which can lead to indexing. These engines should never be placed in the middle of your tiered structure, as they will either have high OBLs (outbound links: blog comments/guestbooks) or be mostly no follow (blog comments/wikis), which makes them no good for passing link juice.
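To see what "high OBLs" means on a given page, here is a minimal Python sketch using the standard-library `html.parser` that counts links pointing to other hosts and how many of them carry `rel="nofollow"`. It assumes "outbound" means a different hostname from the page's own. It ignores page-level robots meta tags and links inserted by JavaScript, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class OutboundLinkCounter(HTMLParser):
    """Counts <a href> links pointing to a host other than the page's own host."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.page_host = urlsplit(page_url).hostname
        self.outbound = 0
        self.outbound_nofollow = 0

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # Resolve relative links against the page URL before comparing hosts.
        target = urlsplit(urljoin(self.page_url, href))
        if target.scheme not in ("http", "https") or target.hostname == self.page_host:
            return
        self.outbound += 1
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.outbound_nofollow += 1


def count_obls(html, page_url):
    """Return (outbound links, how many of them are nofollow) for one page's HTML."""
    counter = OutboundLinkCounter(page_url)
    counter.feed(html)
    counter.close()
    return counter.outbound, counter.outbound_nofollow


sample = """
<a href="/about">About</a>
<a href="https://guestbook.example/entry/2">Internal</a>
<a href="https://site-a.test/">Visitor 1</a>
<a href="https://site-b.test/" rel="nofollow ugc">Visitor 2</a>
<a href="mailto:owner@guestbook.example">Mail</a>
"""
print(count_obls(sample, "https://guestbook.example/entry/1"))  # (2, 1)
```

A guestbook page with hundreds of visitor links would return a large first number. That is the link juice dilution described above.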

Redirect and indexer links are a mix of no follow and do follow with low OBLs, so they could be placed in the middle tier, but their crawling/indexing rates with Google are incredibly low. Google did a spam update last year to stop these types of links from being indexed.

In my tests, these link types are mostly ignored by Google, which is why I don't use them.

1. If you don't want the software to post to the same sites repeatedly, then disable reposting of links.

You'll need to test this, as you won't get many links if each project uses each site only once. If you only have 100 sites in your site list, then you will only get 100 links per project.

2. For Amazon product review pages, I'd suggest targeting brand names and product name variations; these usually have lower competition and are easier to rank for.

The best engines for contextual do follow links are the real estate and job packages. The bio links package is OK for this too. These allow keyword anchors to be placed within the content, the most effective type of link for rankings. Other built-in engines are also good for this, such as Moodle, WordPress, SMF, Xpress Article, DWQA and Gnuboard, although the sites will be a mix of do follow and no follow.

The git a likes package will be best in terms of link numbers, as Gitea has thousands of working sites. But its do follow links are profile URL anchors, not keyword anchors, and the wiki links it makes are no follow, so they're no good for pushing link juice through.

I've bought all of the engines. My strategy is based on acquiring links from as many unique domains as possible. My site list for SERNuke engines has over 4000 sites in it.

3. Think pyramids when building 3 tiers. As you already have T1 links, you only need to build T2/T3 projects pointing to them. A good strategy would be to use contextuals/profiles on T2 and the junk links on T3: blog comments, guestbooks, redirects and indexers, as well as wikis. Also make sure that in your T3 project you set up the tier settings and enable "do follow" links only. This setup is designed to push link juice to your T1s.

That's one way. Or you could use contextuals and profiles across both T2 and T3 and avoid the junk links. This is what I do, as these link sources are mainly content-based and will have better indexing rates than the junk links.

All I can say is play with the software and run different tests. There are lots of strategies that can be deployed once you understand the basics.

There are 2 ways to control the number of links made in each tier: either duplicate a project x times to have more links made in that tier, or use the reposting settings to have a project make more accounts and more posts. If you use reposting, make sure you also use delay settings.
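The arithmetic behind both levers, and behind the "100 sites means 100 links per project" point about disabling reposting, can be sketched in a few lines of Python. These are ceilings that assume every submission succeeds; real counts will be lower. The parameter names are hypothetical and are not SER's option names, and timing (e.g. the delay settings) isn't modeled.

```python
def links_per_project(sites_in_list, accounts_per_site=1, posts_per_account=1):
    """Ceiling on links one project can build from a site list.

    With reposting disabled each site is used once (one account, one post),
    so the ceiling is simply the number of sites in the list.
    """
    return sites_in_list * accounts_per_site * posts_per_account


def links_per_tier(sites_in_list, duplicate_projects=1, accounts_per_site=1, posts_per_account=1):
    """Ceiling for a whole tier: each duplicated project reuses the same site list."""
    return duplicate_projects * links_per_project(sites_in_list, accounts_per_site, posts_per_account)


print(links_per_project(100))                                         # 100 (reposting off)
print(links_per_tier(100, duplicate_projects=5))                      # 500 (lever 1: duplicate)
print(links_per_tier(100, accounts_per_site=2, posts_per_account=3))  # 600 (lever 2: reposting)
```

Duplicating projects scales the tier without posting repeatedly from the same account. Reposting gets more out of a single project but hits each site harder, which is where the delay settings come in.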

Is there a reason why you don't use Pingbacks & Trackbacks on T3?

These days I'm more focused on higher quality link sources: ones that are content-based, with low OBLs and good indexing rates.

For T3, the lower quality link sources have traditionally been the way to go. But I'm using contextuals and profiles on T3 instead, which is giving me better results.

So are you saying the contextuals and profiles you use on T3 all get indexed naturally? Is it just MediaWiki links that index naturally?

The new engines work like this right now, but once those sites have been spammed to death, I'd expect things to change.