Huge Difference Between GSA SER and Platform Identifier - Need Advice

Hey guys

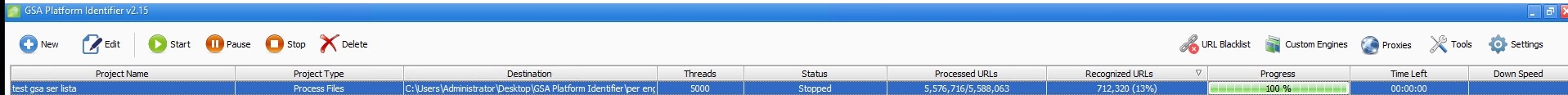

I have a site list that I’ve processed in both GSA SER and Platform Identifier. With GSA SER, I got close to 3 million identified, while with Platform Identifier, I only got 712k.

The thing is, GSA SER takes me 7–10 days to process this list, while Platform Identifier usually takes 1–2 days with 5000 threads. This is really driving me mad: I can’t wait 10 days just to sort a list, which is why I bought PI.

My question is: why is there such a huge difference? I would expect the results to be at least close, not off by millions. Which one should I trust more?

Any insights would be appreciated.

Comments

I just tested GSA Platform Identifier again using the same settings as above, this time with 1000 threads, and the results are only slightly better: the identified share went from around 13% to 16%.

However, I noticed something strange:

The status bar says 100% finished, but PI keeps running for 5+ hours without actually processing any new URLs. It just sits there. Is this a bug?

At this point, I’m not sure how to proceed anymore.

I can’t wait for SER to process lists for 7–10 days, but PI gives far fewer results. And I really don’t want to import the raw list directly into SER and waste time/resources processing engines it can’t even post to.

But right now it feels like I have no choice because PI isn’t finishing properly and SER takes way too long.

Any advice on how to handle this would be appreciated.

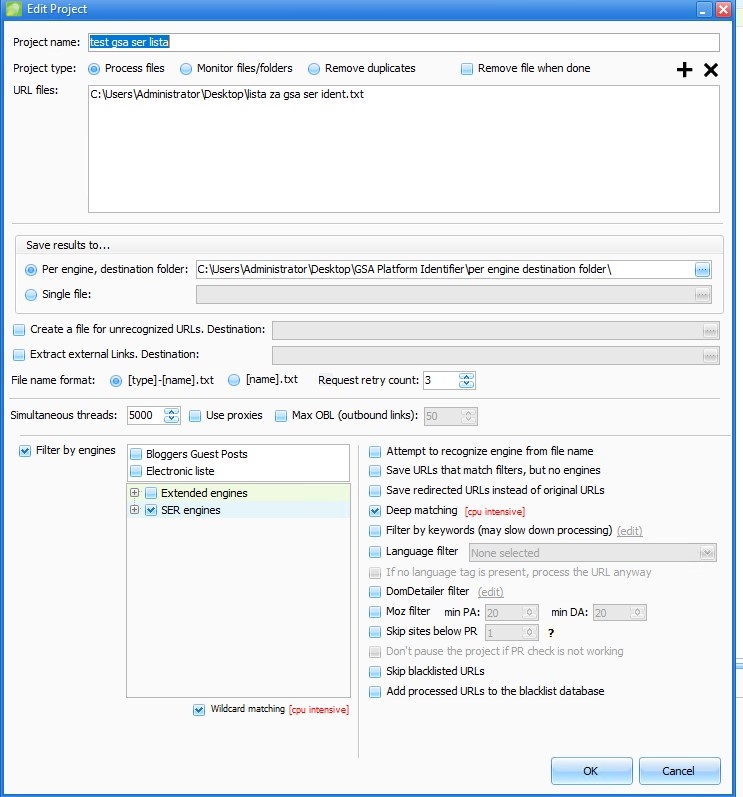

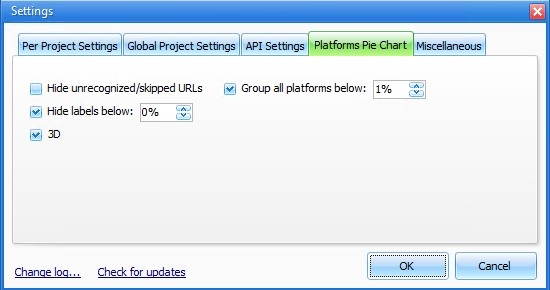

Can you try the settings below? With 5000 or even 1000 threads, you are just causing bottlenecks.

Also, make sure to export the engines from GSA SER and paste them into the engines folder of GSA PI, overwriting the existing files. GSA SER engines are updated more often than GSA PI’s, and if you use SERNUKE, their engines are not included in GSA PI by default. You will find the export in GSA SER under the Advanced tab \ Misc. (See screenshot below.)

I was just rereading a related post, and @sickseo said he uses it for this purpose and it works, unless I’m misunderstanding one or both of you? https://forum.gsa-online.de/discussion/comment/196849/#Comment_196849

My Test Results

I ran a similar set of tests on the same list and here’s what I got:

From what I can see, lowering the thread count improves stability and parsing accuracy, but the trade-off is speed. I also noticed that enabling the bandwidth limiter probably cost me a few percentage points; without it, I might have squeezed out a bit more.

Still, the numbers are far below what I used to get with GSA SER (2.5M identified) and GSA Platform Identifier (1.1M identified). I’ll try again by processing an export from GSA SER as @royalmice suggested, and see if that improves the results.

Some additional tips

Got it, thanks sir, appreciate it!

When working with raw URL lists, I’m wondering about the best approach to duplicate removal.

Wouldn’t it be better, at the raw processing stage, to remove duplicates at the domain level instead of just the URL level? That way we avoid spending resources on multiple URLs from the same domain that may not even support posting.
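A minimal sketch of the two approaches, assuming the raw list is plain text with one URL per line and using Python’s standard `urllib.parse`. The function names are hypothetical, and this is not how GSA SER or GSA PI deduplicate internally. Subdomains such as `forum.example.org` are treated as separate domains, because collapsing them to the registered domain would need a public-suffix list.

```python
from urllib.parse import urlsplit


def dedupe_by_url(urls):
    """Drop exact duplicate URLs, keeping the first occurrence (order preserved)."""
    seen = set()
    result = []
    for url in urls:
        url = url.strip()
        if url and url not in seen:
            seen.add(url)
            result.append(url)
    return result


def domain_of(url):
    """Return the lowercase host of a URL without a leading 'www.', or '' if unparseable."""
    # Assume http:// when the scheme is missing so urlsplit can find the host.
    if "://" not in url:
        url = "http://" + url
    try:
        host = (urlsplit(url).hostname or "").lower()
    except ValueError:
        # Raw scraped lists often contain junk, e.g. unbalanced IPv6 brackets.
        return ""
    return host[4:] if host.startswith("www.") else host


def dedupe_by_domain(urls):
    """Keep only the first URL seen for each domain (hypothetical helper)."""
    seen = set()
    result = []
    for url in urls:
        url = url.strip()
        host = domain_of(url) if url else ""
        if host and host not in seen:
            seen.add(host)
            result.append(url)
    return result


if __name__ == "__main__":
    raw = [
        "http://example.com/blog/post-1",
        "http://example.com/blog/post-1",
        "https://www.example.com/guestbook.php",
        "http://forum.example.org/thread/42",
    ]
    print(len(dedupe_by_url(raw)))  # 3
    print(dedupe_by_domain(raw))
```

One trade-off to keep in mind: keeping only the first URL per domain may throw away the specific page that actually matches an engine, so domain-level deduplication saves resources at the cost of possibly losing some identifiable targets.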

One thing I’m curious about: I noticed that in the picture above you deselected the “wild matching” and “deep matching” options in GSA PI. Wouldn’t those improve the identification rate, or do they just waste resources and slow things down? Is it better to leave them off for efficiency, or do they actually help in certain cases?

I ran a series of tests using engines exported from GSA SER, and honestly, there’s not much difference in output. Disabling the bandwidth limiter, wildcard matching, and deep matching just ends up overheating the machine without delivering significantly more recognized links.

Even after testing across all thread levels, from highest to lowest, and comparing both setups (with and without wildcard + deep matching), the results still fall short of what I previously achieved using GSA SER’s platform identifier. So I’m not sure there’s anything left to optimize.

If Sven needs backup data or wants to cross-compare GSA SER and GSA PI, I’ve got full logs and results ready.

https://prnt.sc/H7iSmPR0axsC