Issue with GSA SER and proxies

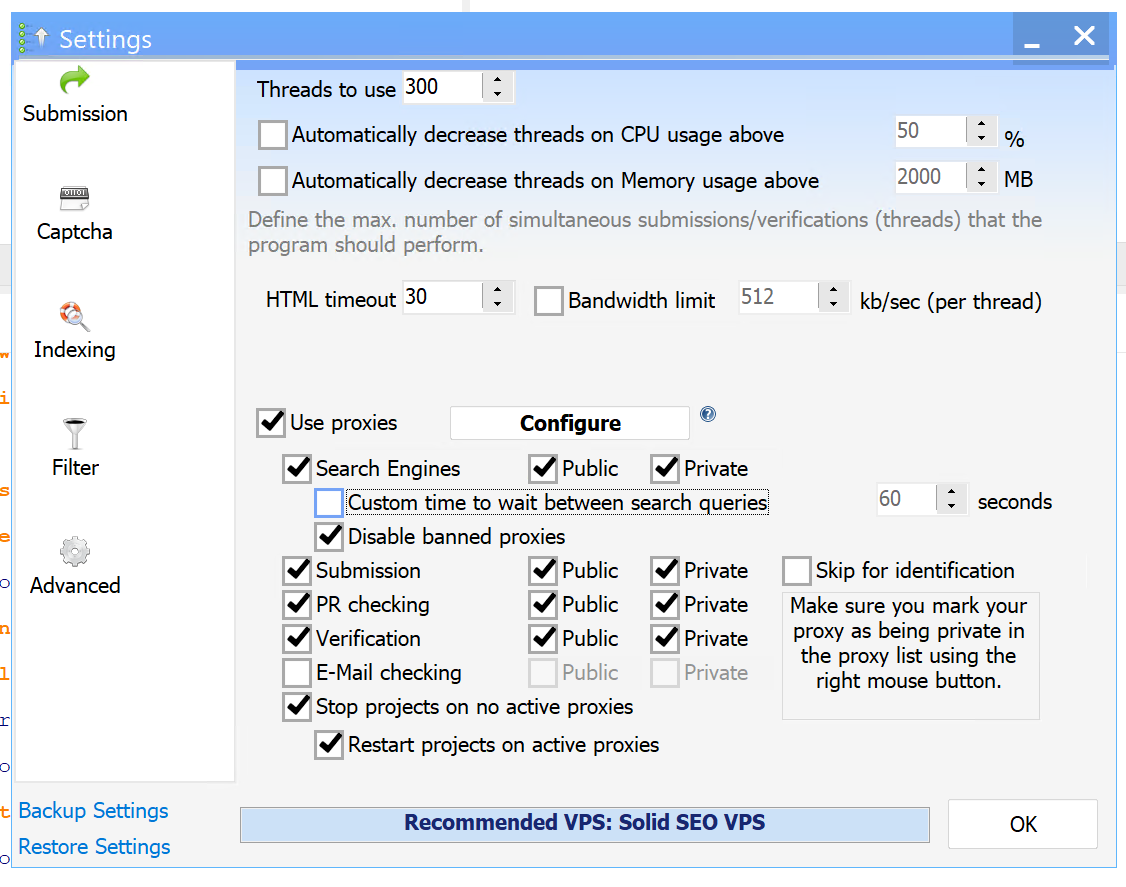

Hi, I'm new to GSA SER and having problems setting it up. I have GSA CB, 2Captcha, and GSA Proxy Scraper. I'm using both private and public proxies for everything except email, and I selected only forum and blog comments for testing. I've encountered the following issues:

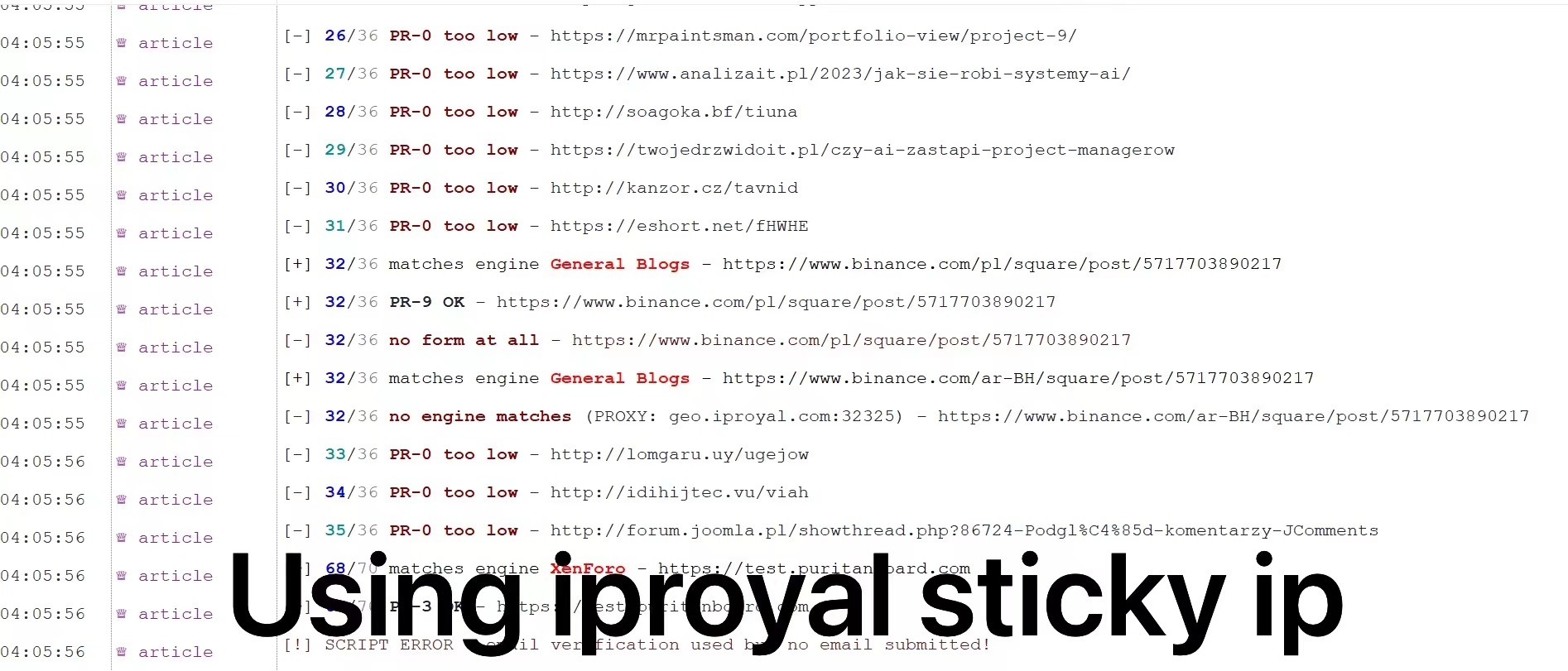

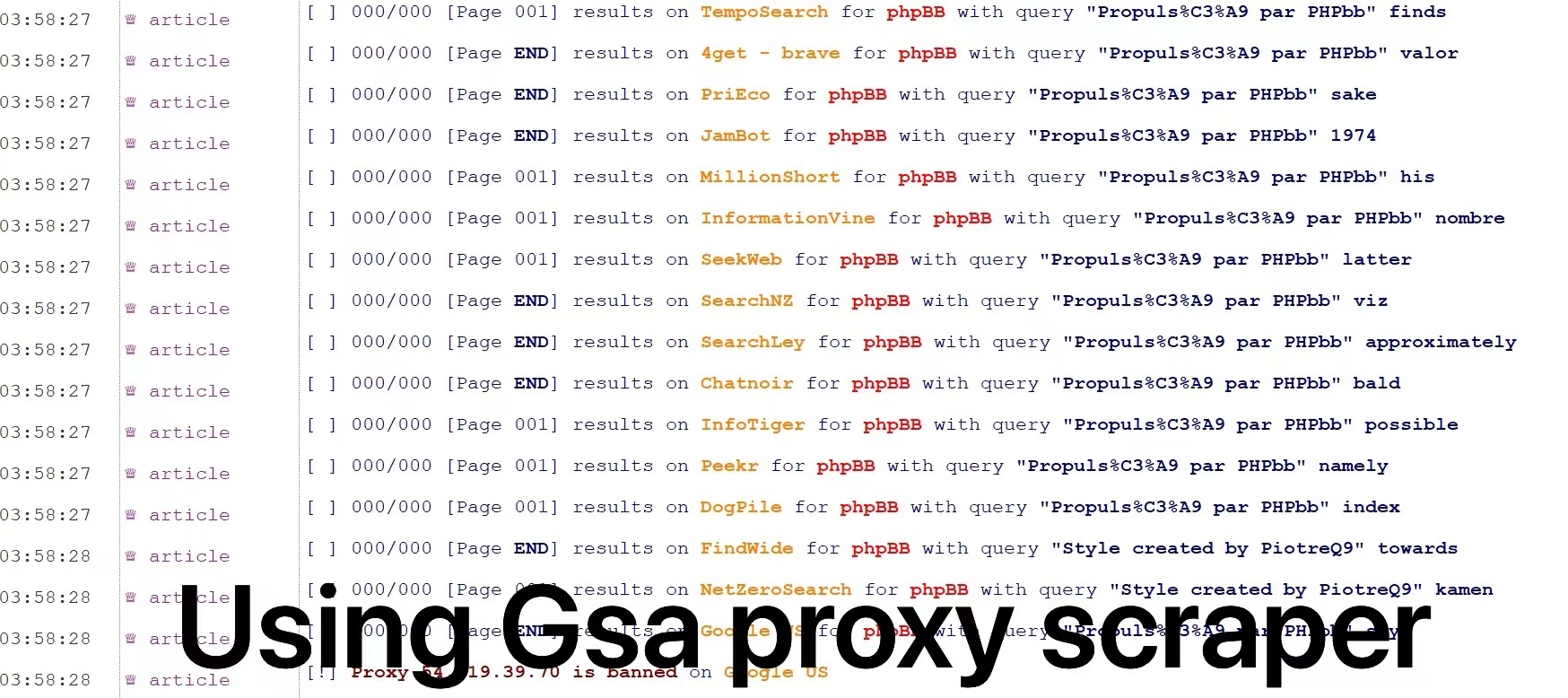

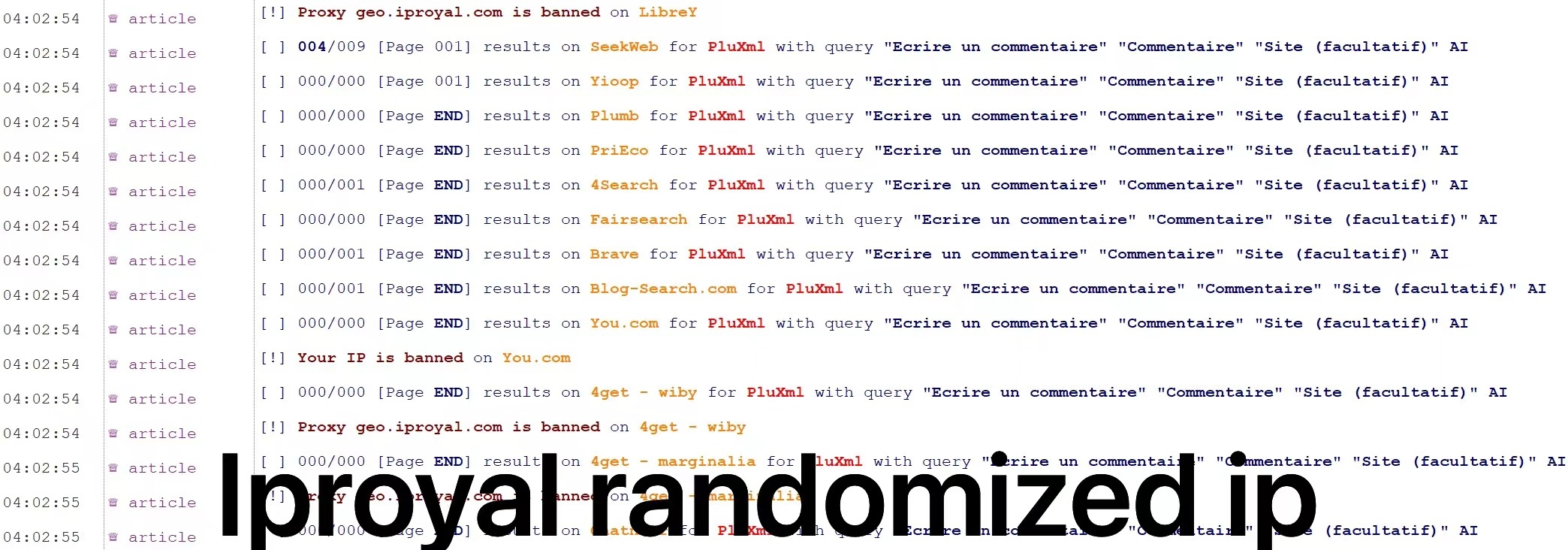

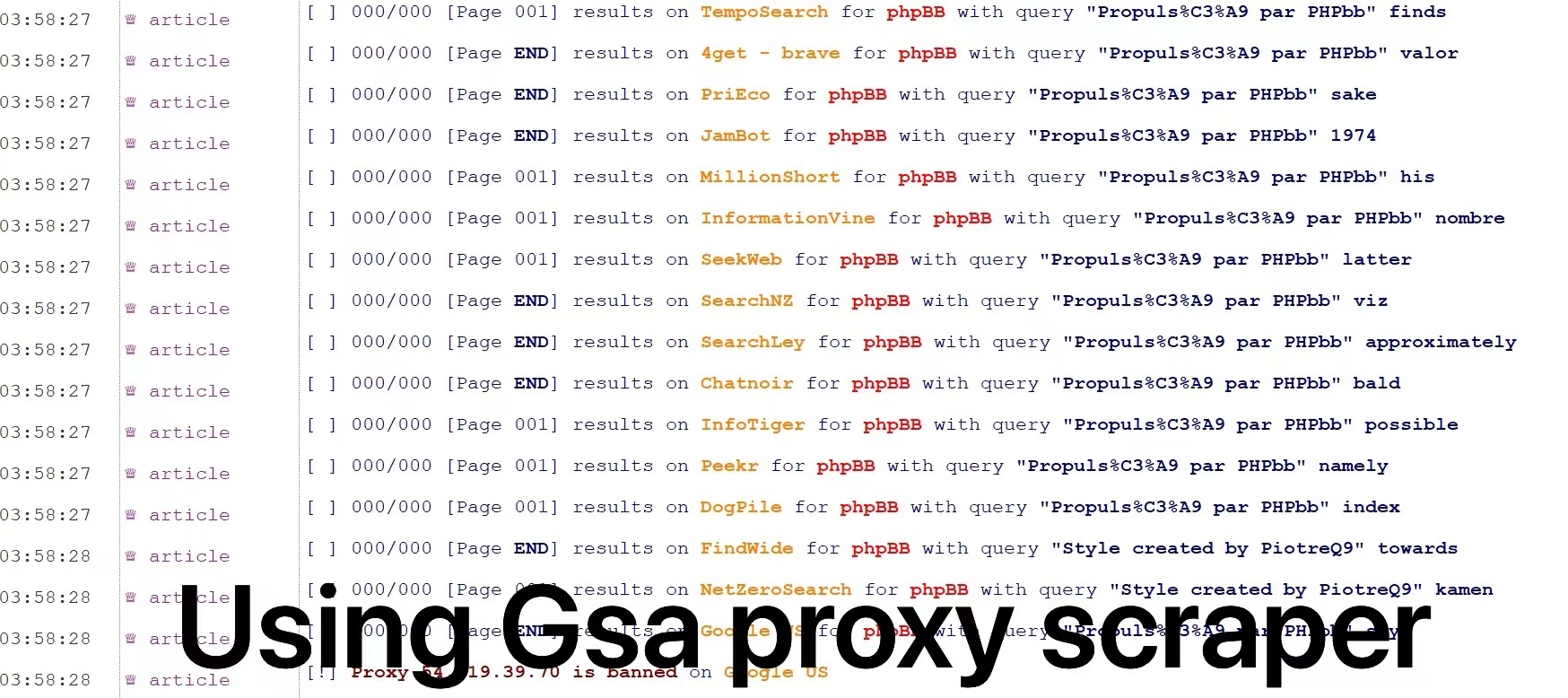

1. Using GSA Proxy Scraper in GSA SER: The proxies work in both SER and the proxy scraper when I test them. However, when I run the project, I often see [000/000] Page END or [000/000] Page 001. Sometimes it shows [000/014] or other numbers, but most are [000/000]. There are rarely any submissions, and there are no verification links. Why is that?

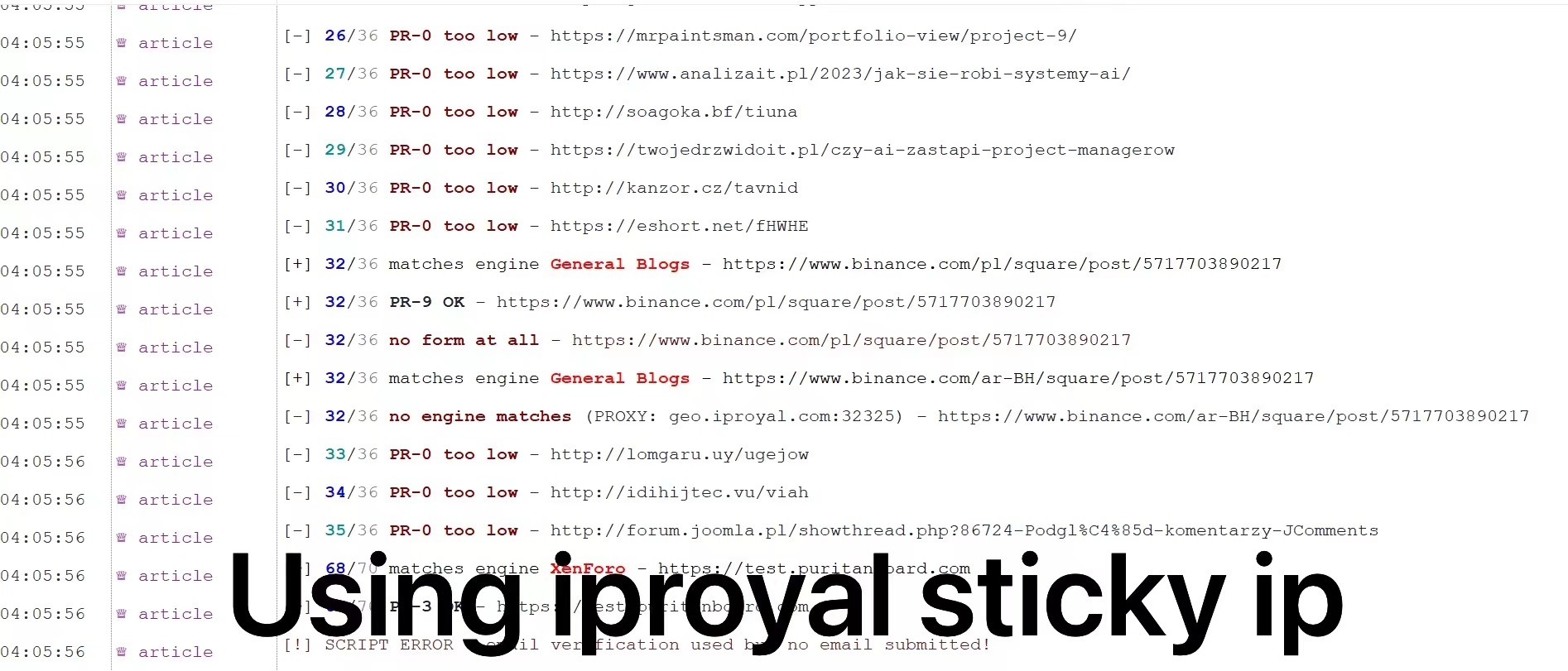

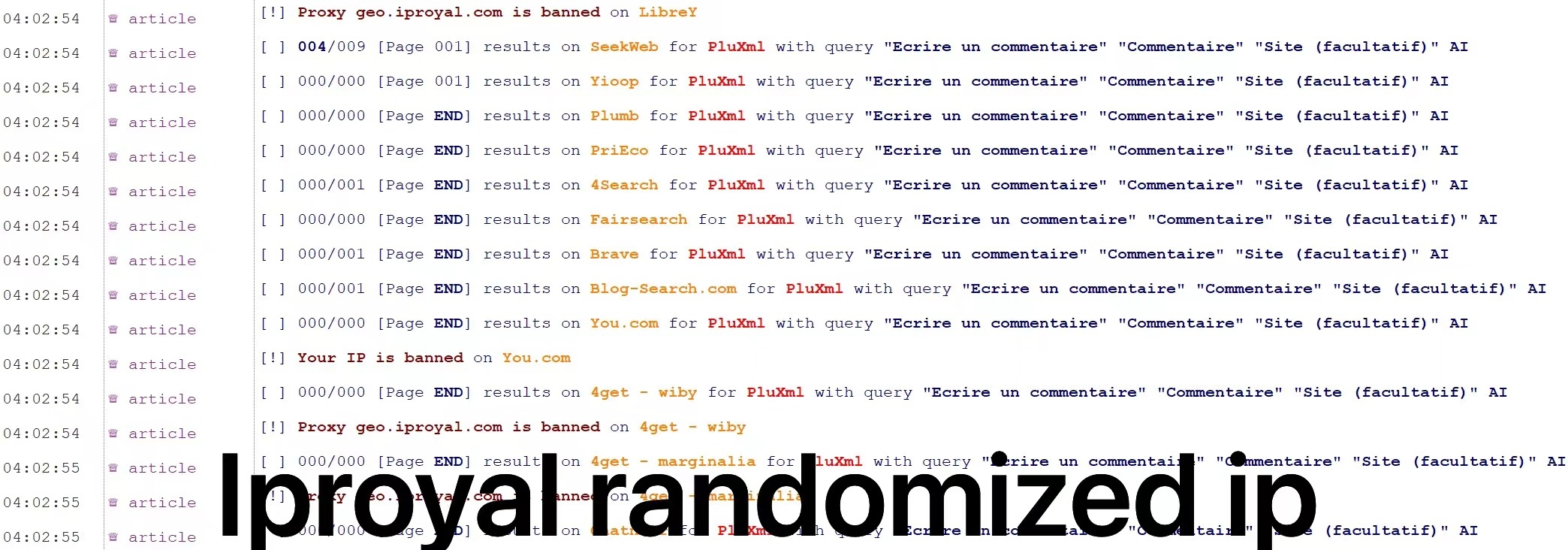

2. Using IPRoyal for Sticky IPs: I get a list of sticky IPs to import into SER. This gives me normal output with messages like PR score, engine match, submission, etc. However, it keeps running until all sticky IPs get banned, and then I need to get another list from IPRoyal. How can I avoid the bans?

3. Using IPRoyal with Randomized IPs: When I use randomized IPs, they get banned after only a small number of searches. I thought a randomized IP would use a different IP for each request sent. How can Google ban it if it's using different IPs?

Sorry for the basic questions; I'm new to all this. Please help! Thanks very much!

Comments

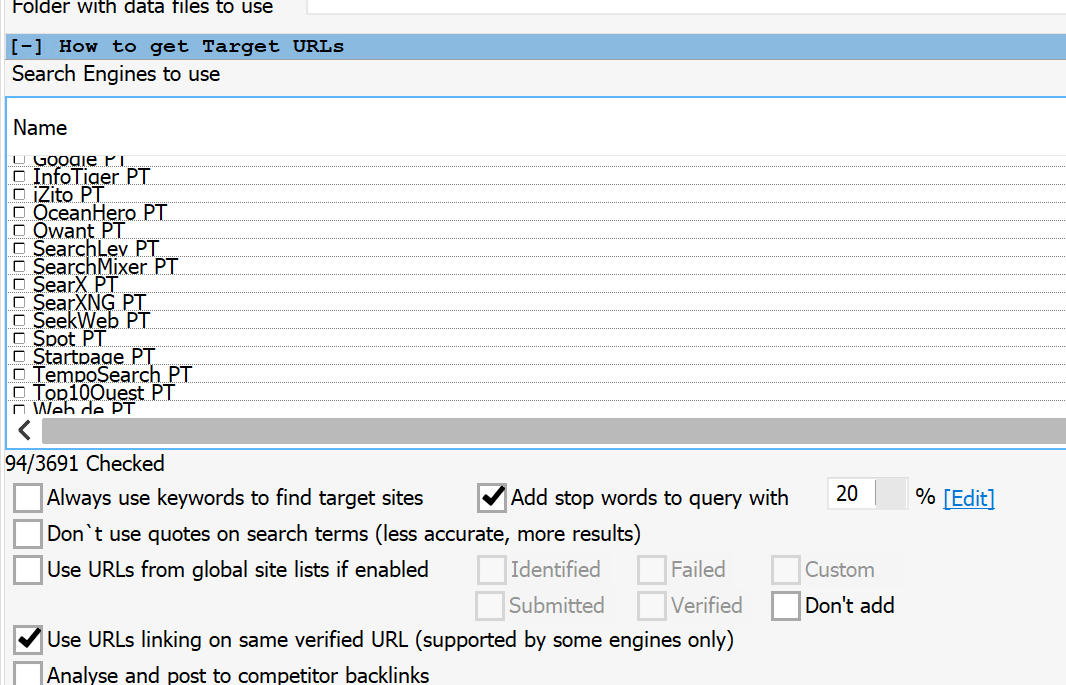

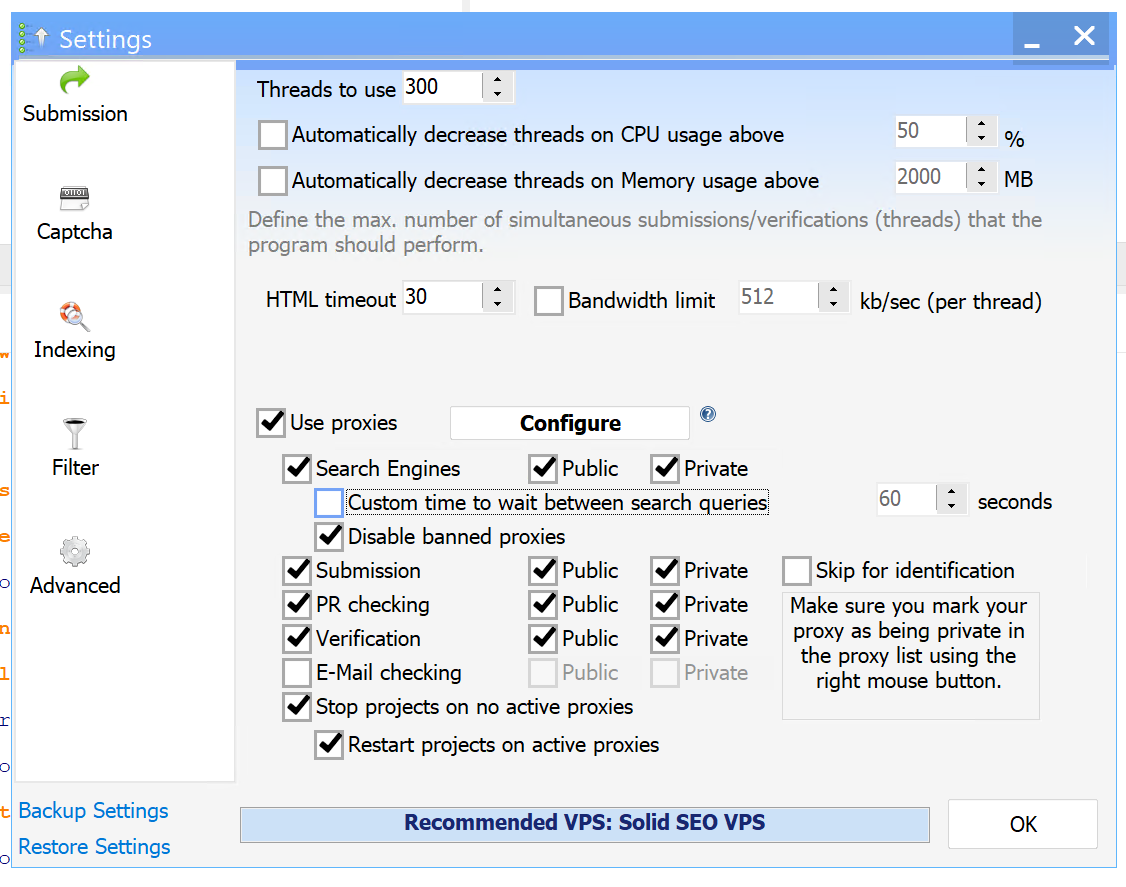

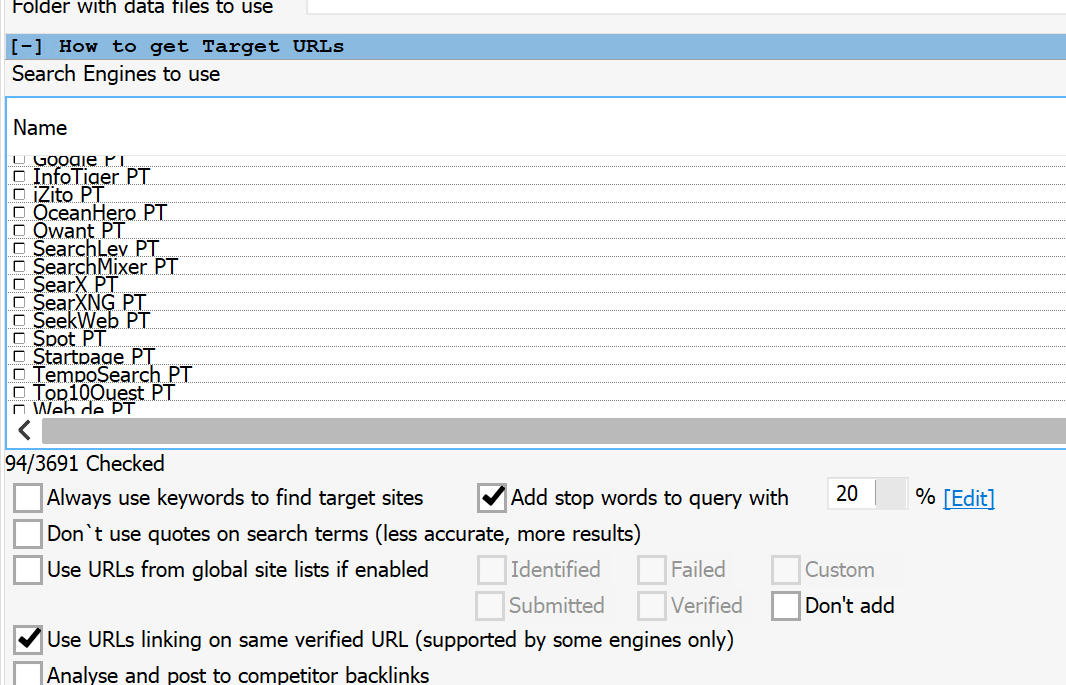

I edited the stop word list so it's now all English, and I only use English search engines. I enabled the custom time to wait and set it to 60s. I'm using only private proxies to search and no proxy to submit. I'm getting submissions and verifications now. Thanks a lot, man.

Now I have the following questions:

I still get banned by many engines. Below is part of the log:

This is right at the start of the project, when I'm sending the first request to the search engines. The query looks OK, so why am I banned? I went through the whole log and there are about 19 search engines that still ban me even though the query looks fine. I don't understand. Are there other factors affecting the ban?

A few more questions:

I know 4get is a search engine and Brave is a search engine, but what is "4get - brave"? I see many search engines named like this.

What do the [number1/number2] and the page number mean? For example:

23:27:46: [ 001/013 ] [Page 001] results on MSN for Unknown Polish Directory with query "URL (http://domena.pl)" "Opis ma"

Does this mean 1 out of 13 results on page 1 got collected?
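In case it helps anyone comparing these lines across a long log, here is a minimal Python sketch that splits a line like the one above into its parts. The layout is assumed from this single example, the names `LINE_RE`, `parse_result_line`, `first` and `second` are made up for illustration, and the code does not claim to know what the two numbers actually mean; it only extracts them so they can be tallied per engine.

```python
import re

# Layout assumed from the single log line quoted in this thread;
# other SER log messages may look different and will simply not match.
LINE_RE = re.compile(
    r"^(?P<time>\d{2}:\d{2}:\d{2}): "
    r"\[\s*(?P<first>\d+)/(?P<second>\d+)\s*\] "
    r"\[Page (?P<page>\d+|END)\] "
    r"results on (?P<engine>.+?) for (?P<platform>.+?) with query (?P<query>.+)$"
)


def parse_result_line(line):
    """Return the fields of a search-result log line, or None if it does not match."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    return {
        "time": m["time"],
        "first": int(m["first"]),    # number before the slash (meaning not assumed)
        "second": int(m["second"]),  # number after the slash (meaning not assumed)
        "page": m["page"],           # kept as text because it can be "END"
        "engine": m["engine"],
        "platform": m["platform"],
        "query": m["query"],
    }


if __name__ == "__main__":
    sample = (
        '23:27:46: [ 001/013 ] [Page 001] results on MSN for '
        'Unknown Polish Directory with query "URL (http://domena.pl)" "Opis ma"'
    )
    print(parse_result_line(sample))
```

Running it over a saved log file line by line and counting how often `second` is 0 per `engine` would show which search engines are returning nothing.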

Also, I don't understand these numbers:

103/167, then 519/547. I don't understand.

Thanks a lot for your detailed answer. It's helping a lot.

So [ 001/013 ] [Page 001] is about collecting search results, right? As in, 1 out of 13 search results was collected on page 1?

Yes, I was expecting something like 103/167, then 104/167, then 105/167, etc. How does 167 go to 547, and how does 103 go to 519? This isn't clear to me.

Also, why are the last three log lines all 521/547?