Need a paid consultation with a GSA professional

I need help setting up GSA. I already understand the program and its basic functions quite well, but the results are still weak.

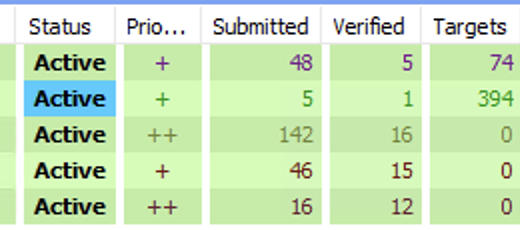

Over a few days, I got at most 15 links per project.

I want to get at least 50-70 contextual links per day for my Tier-2 projects.

I use:

- XEvil
- Good proxies
- Spintax content generated with ChatGPT
- A powerful VPS

I do not use ready-made lists; I want GSA to find suitable engines for placing links by itself.

If necessary, I will buy additional resources.

Please PM me only if you are sure your consultation will get me a large number of contextual links (at least 50 per day).

I am not interested in junk like blog comments, indexers, or pingbacks. I want only contextual links, without using SER lists.


Comments

Contextual do-follow links come from GNUboard, WordPress, BuddyPress, SMF, Joomla K2, Moodle, and XpressEngine. These are the main engines to focus on when scraping.

There are plenty of other engines to scrape, but they will give you either do-follow profile links or no-follow contextual links.

As I finally work through my to-do list, including SEO tasks, I find there are a number of GSA-related tasks I still need to get to. It's probably a good idea to start a new GSA learning-and-doing task list for the things I've meant to think about but never actually added to a list!

If you want to make a success of automated link builders, you need to put in the work yourself, and not expect everything to be handed to you on a plate.

You might also need to find and update the 'page must have' footprints on the relevant engines. More info here: https://docu.gsa-online.de/search_engine_ranker/script_manual?s[]=page must have

I think the out-of-the-box setup of GSA SER is really just a template meant to be heavily customized by the user. Updating the footprints is, I would guess, work each of us has to do in our own way. It is yet another area that can be approached in different ways.