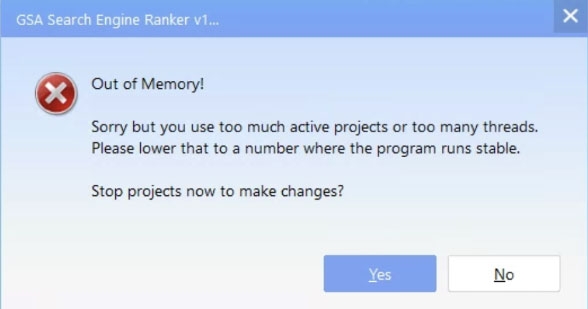

"Out of Memory!"

I keep getting this error every time I wake up. My posting PC has 32 GB of RAM, and SER uses maybe 3-3.5 GB at the most. I also use xEvil, and the very most I've seen it reach is about 5 GB.

Essentially, this setup (including Windows) is using under 50% of my memory yet keeps throwing this error.

I've seen this posted about in this thread, but there doesn't seem to be any resolution. Is there any way to prevent this? I'm only running at 100 threads.

Comments

I honestly don't even understand how it's using so much memory. I have 3 projects active using search engine scraping/posting and auto-verify. In one project I'm importing large link lists, but other projects are just scraping and posting.

Does GSA have some sort of memory cleanup that executes at some point? When I wake up every other day, the program is at 3.2 GB of memory and only running at 15 threads instead of 200.

As an aside, I remember importing millions of links at once in 2013 across many projects and never saw the error until now.

My server is 28-core with 64 GB of RAM. I only use 200 threads because otherwise the memory leak (or issue) happens even faster. The auto-throttle drops my threads to 15 after 1-2 days of running. It feels like no memory is being recycled, and it sticks at 3.2 GB forever. I don't use "Analyse competitors" or any other weird settings like that.

https://forum.gsa-online.de/discussion/25965/out-of-memory-error-but-nowhere-close-to-out-of-memory/p1

Which would seem to indicate that it's either...

1) My filter list (4,000 root domains, only 72 KB in size).

2) Importing large link lists at one time.

From watching this happen recently, I'm fairly confident it's #2, and for whatever reason GSA is not freeing up the memory after the targets are done being processed/verified.

@Sven, let me know if you want me to send you a backup project, settings, or anything else. Thank you.

If it is just a matter of compiling, then why not release a 64-bit version? I am sure the speed would be better.
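For anyone wondering why the error shows up at around 3.2 GB on a machine with 32 or 64 GB installed, here is a rough back-of-the-envelope sketch. It assumes SER is a 32-bit build, which the 64-bit request above suggests but nothing in this thread confirms. On 64-bit Windows, a 32-bit process gets 2 GiB of user address space by default, or 4 GiB if the executable is flagged as large-address-aware. The function names are made up for illustration, and the reported figures are treated as GiB.

```python
GIB = 1024 ** 3  # bytes in one GiB


def user_address_space_gib(large_address_aware: bool) -> float:
    """Upper bound on user-mode address space for a 32-bit process
    running on 64-bit Windows (assumption: SER is such a process)."""
    total_bytes = 2 ** 32  # everything a 32-bit pointer can address
    limit = total_bytes if large_address_aware else total_bytes // 2
    return limit / GIB


def headroom_gib(observed_gib: float, limit_gib: float) -> float:
    """Address space left before allocations start failing outright.
    Fragmentation usually makes the practical limit lower than this."""
    return limit_gib - observed_gib


if __name__ == "__main__":
    observed = 3.2   # figure reported in the thread
    installed = 64   # RAM on the server mentioned above
    limit = user_address_space_gib(large_address_aware=True)
    print(f"per-process limit: {limit:.1f} GiB")
    print(f"headroom at {observed} GiB: {headroom_gib(observed, limit):.1f} GiB")
    print(f"share of installed RAM in use by SER: {observed / installed:.0%}")
```

If that assumption holds, the installed RAM is not the ceiling that matters. The process would run out of address space long before the machine runs out of memory, which would fit both the "Out of Memory!" message and the usage sticking at about 3.2 GB.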