Am I being too picky when creating backlinks? 24 hours 0 verified...

Hi everyone, I am a new user of GSA SER; I just installed the demo version yesterday.

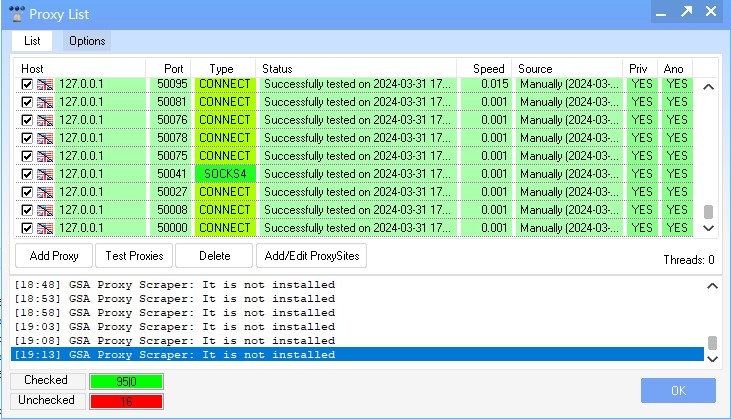

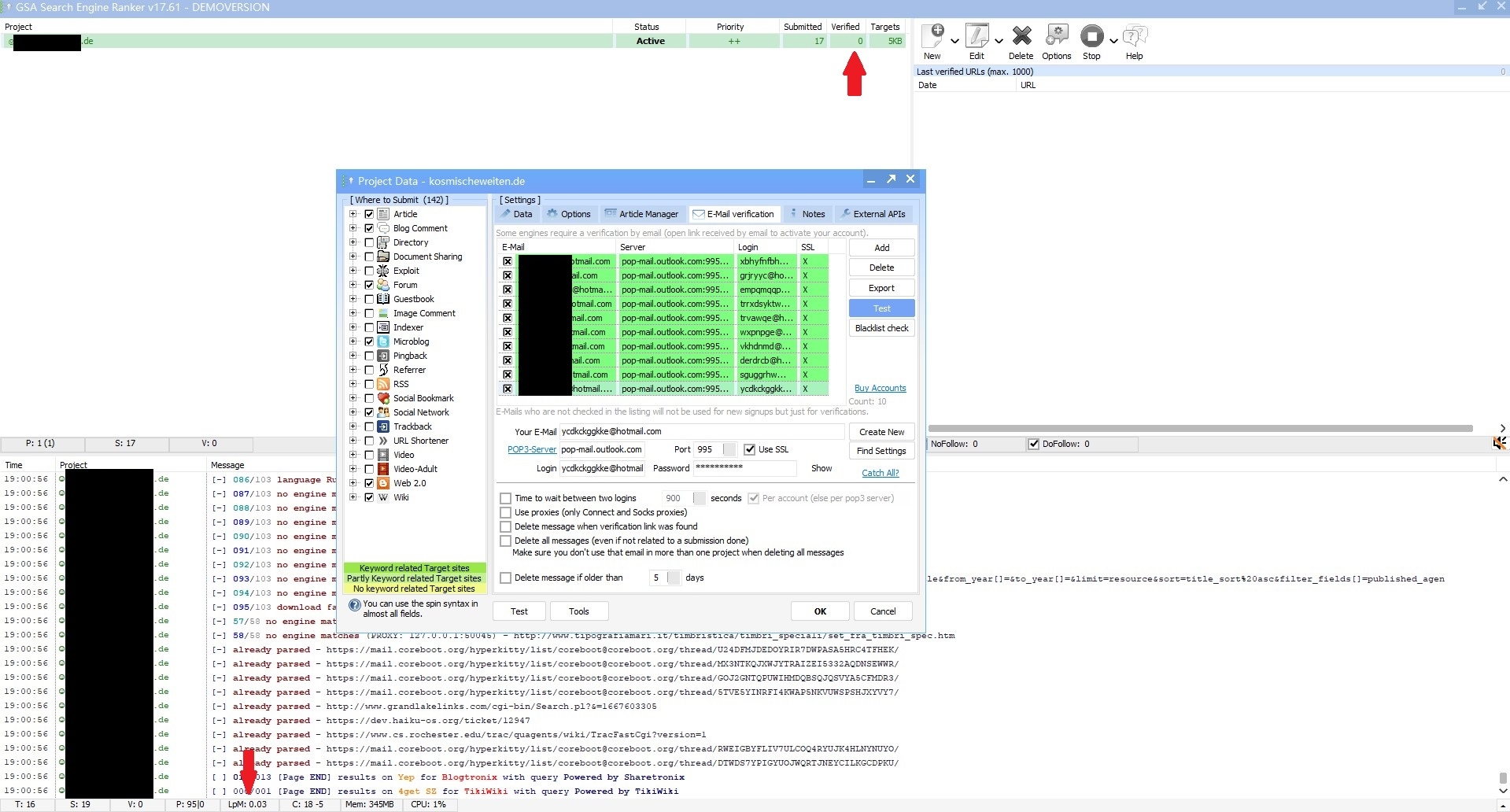

I use 10 emails (all tested successfully), 95 proxies (all tested successfully), CapSolver with enough credits, and 200 threads to run a project with article, blog comment, forum, microblog, social network, web2.0 and wiki.

For search engines I only picked German and English, and I skip sites in all languages other than German.

In Filter URLs I didn’t skip sites below or above any PR and didn’t change the type of backlinks; everything is as original (wiki-article and forum post unticked).

With these settings, I ran the project for almost 24 hours and got only 16 submitted and 0 verified.

One thing I found very strange: I have only spent 0.03 dollars in CapSolver. Is that normal?

What did I do wrong? Did I miss something? Thank you.

Comments

Now I have 32 submissions, and most of them have the tag "awaiting account verification". I switched "when to verify" to 1 hour.

I am not so sure what you mean by "I wouldnt just tick off every engine in a group either."

Do you mean ticking every one under "search engine to use"?

I don't understand the point; my site is in German, so I guess using German and English search engines would be enough.

But I am going to listen to you and tick all engines; I have nothing to lose anyway.

Personally, I separate the two tasks of link building and scraping/testing for working sites. Letting GSA SER do both at the same time makes both processes run slower.

For scraping, 95 proxies isn't going to get you very far, as search engines that block proxies (Google) will likely block all 95 of them within 24 hours. For link building, 95 proxies is plenty.

Sure, you can scrape other search engines, but then you have no guarantee that the scraped sites are indexed in Google. You'll need a separate process in place to check for this. If the sites aren't indexed in Google, then your backlinks won't be indexed in Google either. This means your link building will have zero impact on Google rankings.

I feel like I am seeing Mr. White's angry face.

I have switched it back to German and English only.

Have you ever thought of using Hrefer? By the way, do you have any idea what happened to Gscraper? I still use Gscraper, but I don't know whether it's up to date or not. Back in the day, I used to scrape like crazy with proxies from Proxygo (a BHW seller). Lately, Gscraper offered their own proxy server for a huge price, I guess $60/month or something, then it vanished, and now I am only using link lists, no more scraping. It's worth the time for me.