No engines matches - Tried everything in my power.

Devender_Garg

India

Hello Everyone.

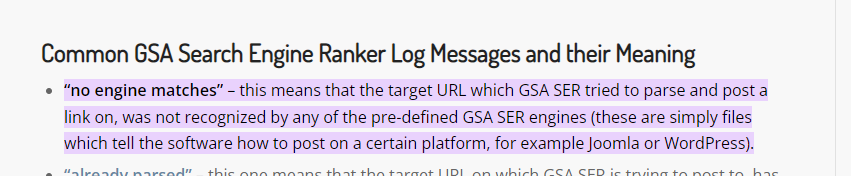

I am stuck with the "No engines matches" error. I have done all the research I can, and I am going to document everything here. Hopefully I can fix my issue and also leave a reference for others with a similar problem.

I have read every forum thread that comes up when filtering for "No engines matches", as well as all the Google results for that query.

https://forum.gsa-online.de/discussion/24402/super-low-verification-on-verified-links/p1

https://forum.gsa-online.de/discussion/367/no-engine-matches/p1

https://forum.gsa-online.de/discussion/22105/no-engines-matches-drupal-please-help/p1

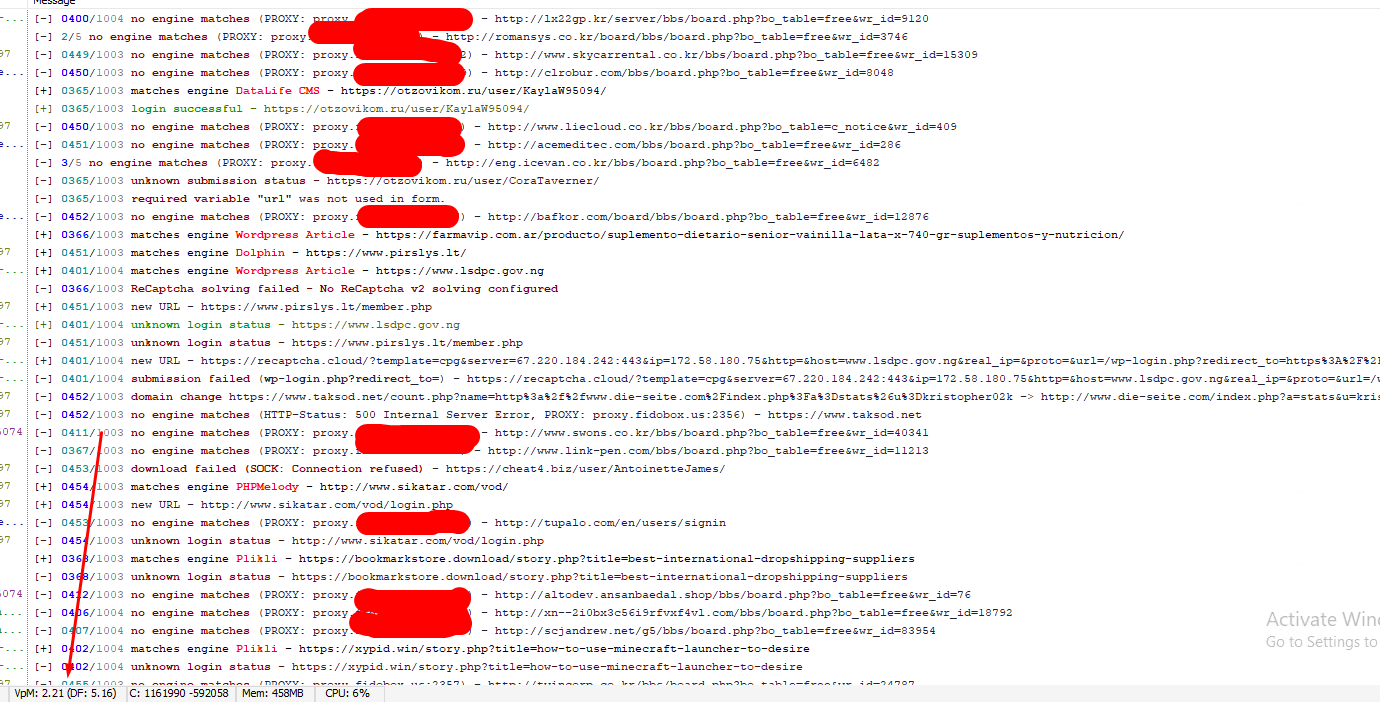

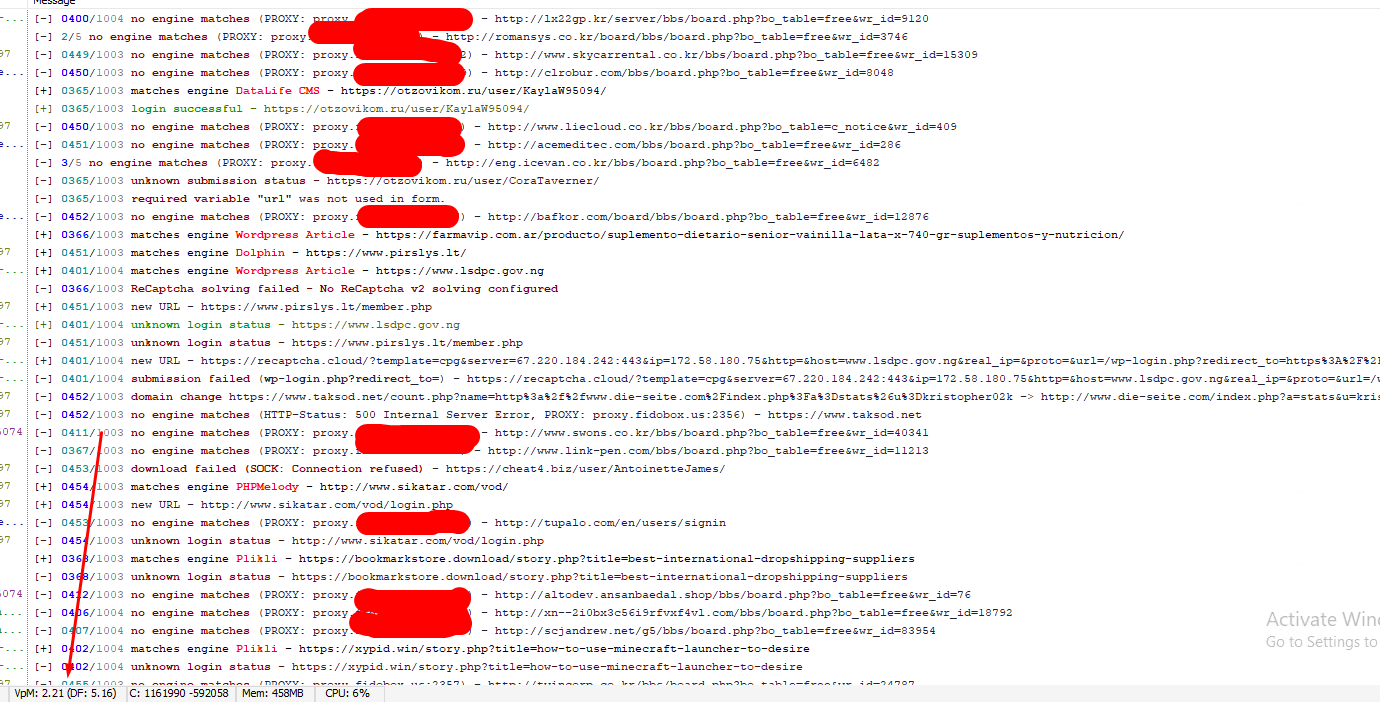

0. Here is what I am getting with 150 threads and 10 running projects, with GSA reading a verified list.

1. Sites moved or changed

In my case this is not possible: when I open the URLs in a browser, they show the links I created a couple of days ago. I also tried a couple of premium verified lists, with the same result. I am not going to name them, because their support **** and my objective is to fix my issue.

2. Proxies.

I don't think so. I tried BuyProxies backconnect proxies as well as residential rotating proxies (20-minute rotation), and both are very fast.

My HTML timeout and number of threads are fine as well.

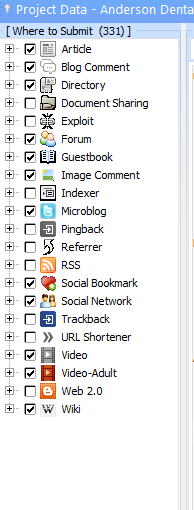

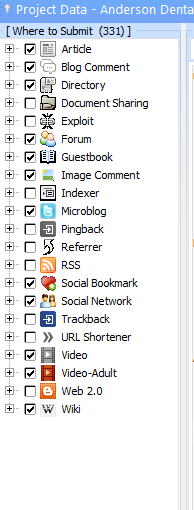

3. I have the following engines checked. I removed all lower-quality backlink sources, and I don't believe this is the issue.

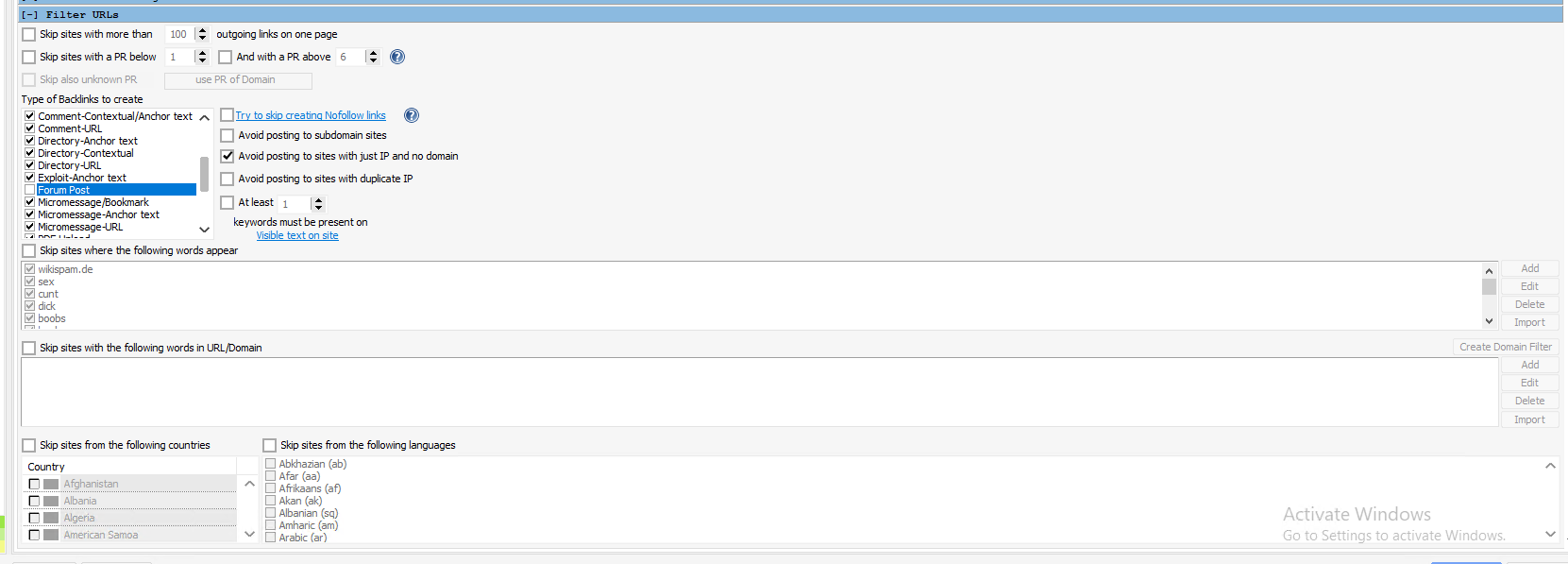

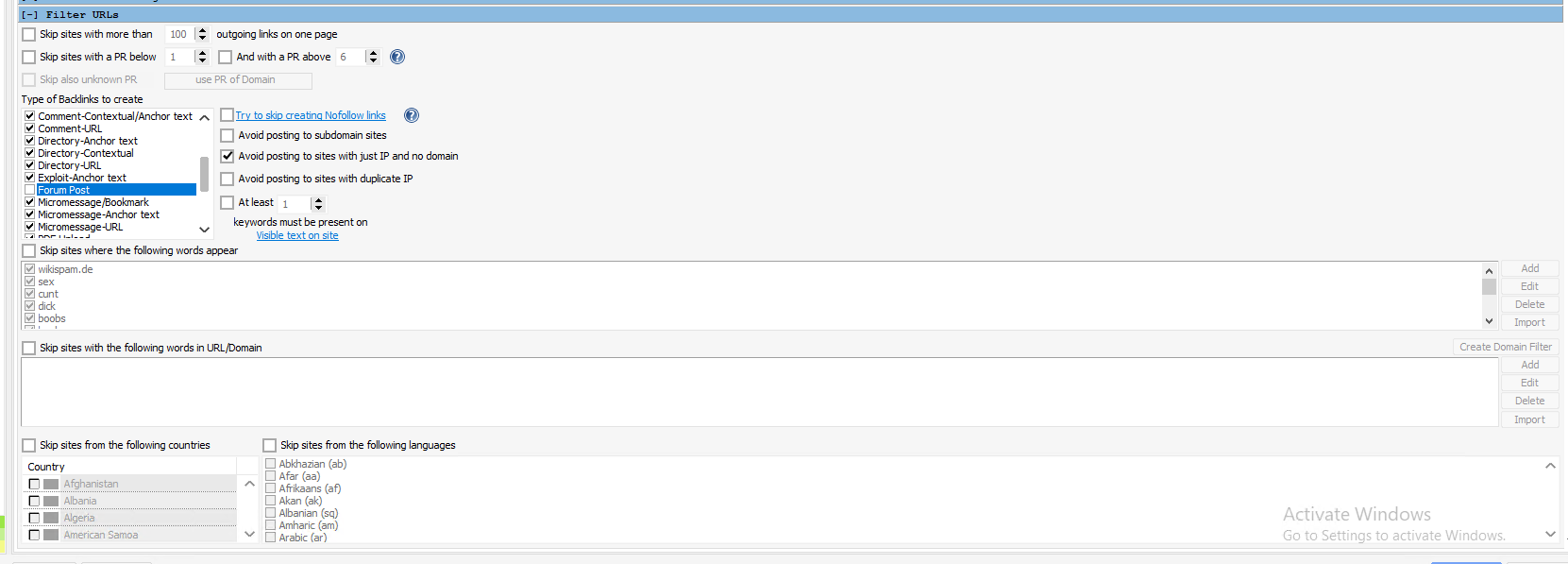

4. I saw @Sven once say that "Type of backlink to create" could also be an issue. I left it untouched.

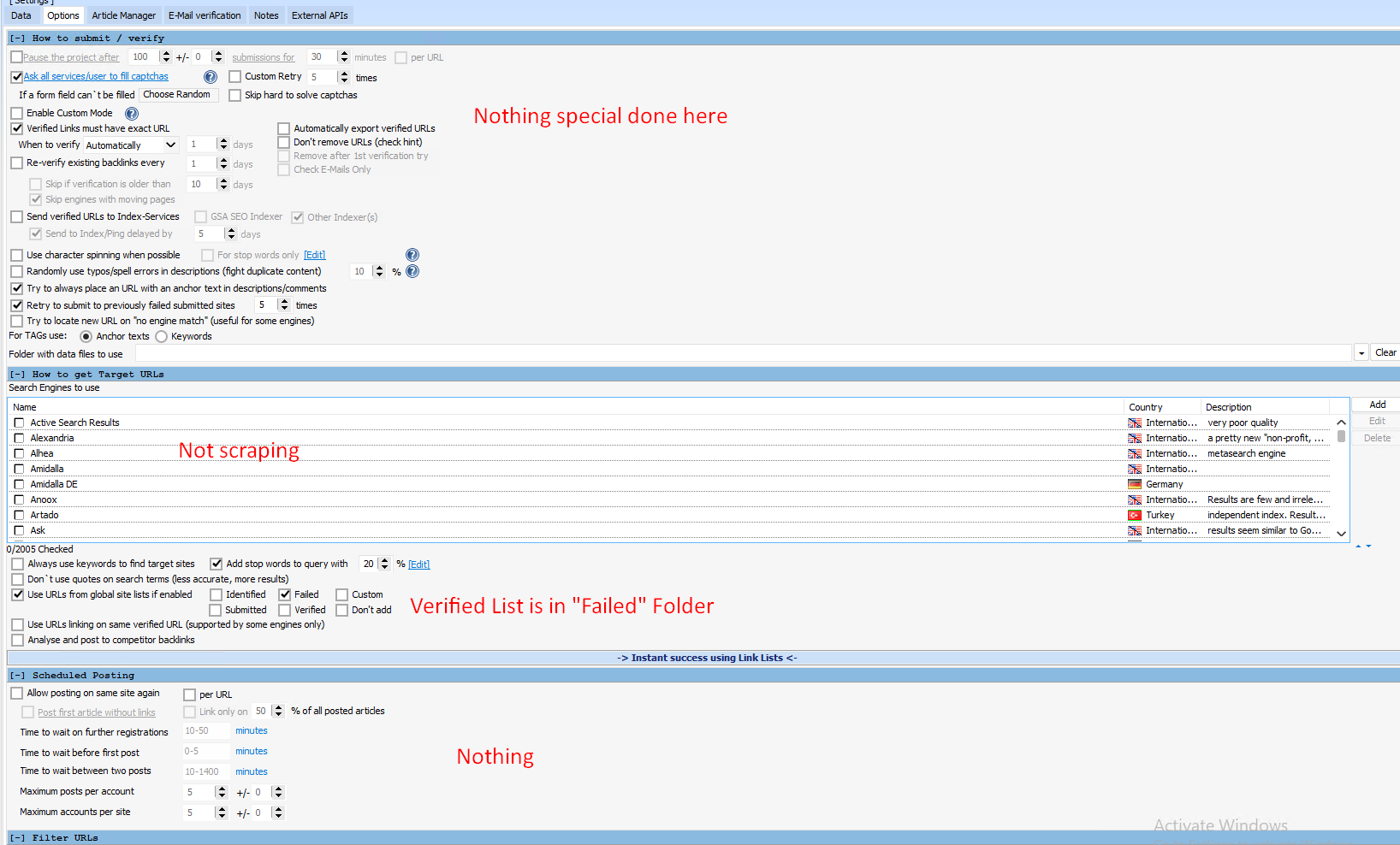

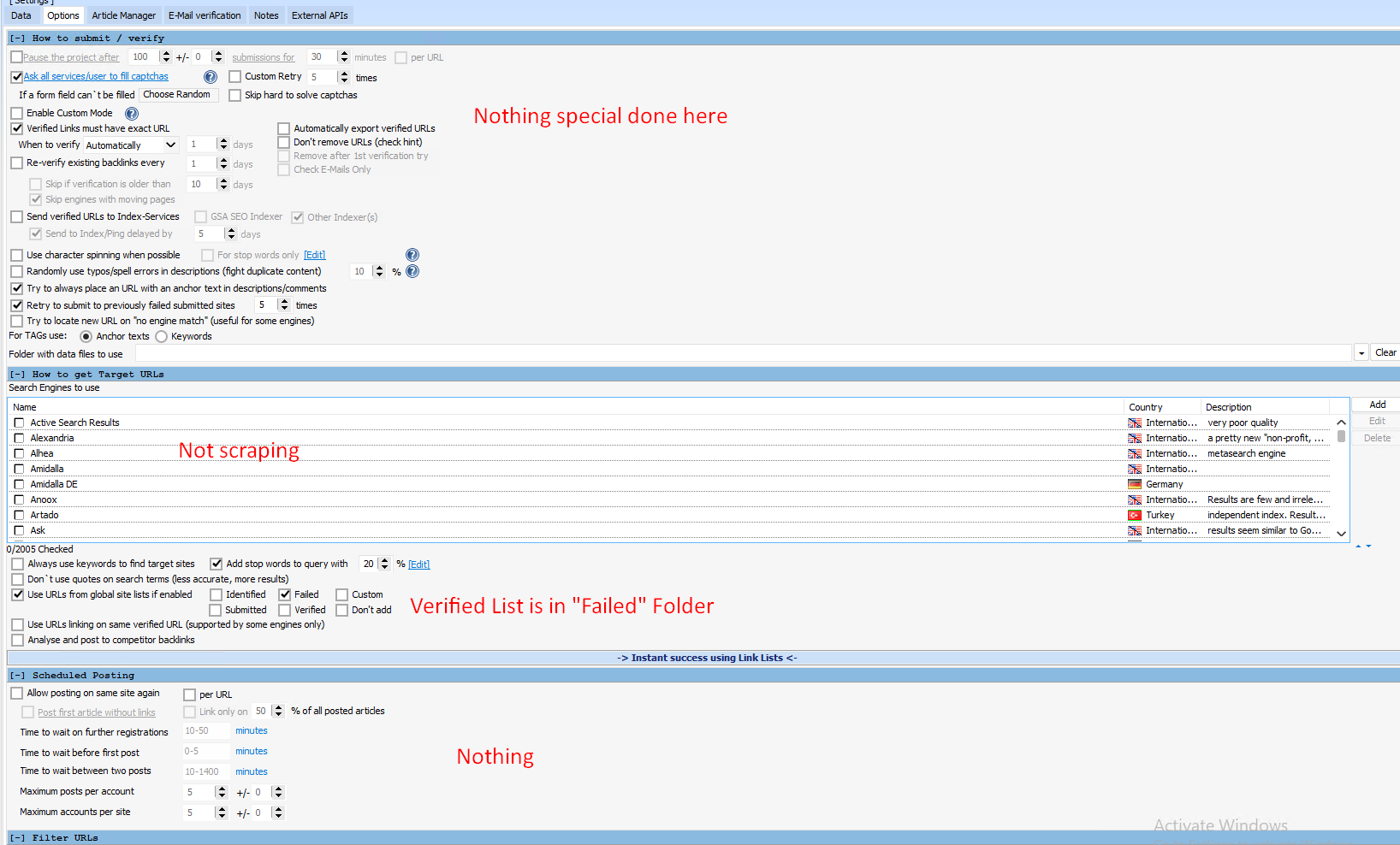

5. Here are the other parts of my settings.

6. Content is from SEO Content Machine, and the preview works fine. The same content is used in SEO Autopilot and elsewhere.

7. I am using my own .com domains set up on my self-hosted cPanel (a root server that I use for lots of other stuff).

The same email server works fine with other tools and verifies successfully in GSA SER.

But I feel too few emails are arriving in the inbox.

I believe GSA is rejecting my verified list and not even trying to create accounts, so fewer emails arrive.

8. I forgot to mention that I also turned off Windows Firewall and all Defender-related security, and I never installed an antivirus.

Can someone please look into this and help me get a decent VPM without using the unchecked engines?

If any more information is required, I will be happy to share it.

Thanks and Regards

Comments

I also tried installing it on my personal PC, with the same result.

It seems like it could be security on these sites blocking repeated submissions from the same IP. That's my best guess. I can still make links on this platform, so there doesn't seem to be anything wrong with how the engine is coded.

But running the same verified links from a completed project on a new project still gives "no engine matches", not on all the sites but on some of them.

I am testing different things that might cause trouble. I also took Dropbox out of the equation for testing.

The irony is that none of the premium list providers are willing to help with this kind of issue, yet you guys are. They probably need a strong competitor.

`&wr_id=.*$`

It looks like all the URLs in your screenshot with `&wr_id=` are dead, so there is nothing to identify, whereas if you remove that parameter you will see the actual forum page, which can be identified as a GnuBoard site.
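To apply that pattern to a plain-text URL list outside SER, here is a minimal Python sketch. It assumes one URL per line, and the function names are hypothetical. It only rewrites the URLs and does not change anything inside SER. Note that `.*$` also removes everything after the parameter, including any `#...` suffix.

```python
import re

# The pattern from the post above: "&wr_id=" plus everything after it on the line.
WR_ID_PATTERN = re.compile(r"&wr_id=.*$")


def strip_wr_id(url):
    """Remove the &wr_id=... post parameter and anything after it."""
    return WR_ID_PATTERN.sub("", url.strip())


def strip_all(urls):
    """Strip wr_id from every URL and drop duplicates, keeping first-seen order."""
    seen = set()
    cleaned = []
    for url in urls:
        board_url = strip_wr_id(url)
        if board_url and board_url not in seen:
            seen.add(board_url)
            cleaned.append(board_url)
    return cleaned


if __name__ == "__main__":
    sample = [
        "https://xn--v52b2zd5t6jbib523m.com/bbs/board.php?bo_table=free&wr_id=46342",
        # hypothetical second post on the same board, for illustration only
        "https://xn--v52b2zd5t6jbib523m.com/bbs/board.php?bo_table=free&wr_id=46343",
    ]
    print(strip_all(sample))
    # ['https://xn--v52b2zd5t6jbib523m.com/bbs/board.php?bo_table=free']
```

Both dead post URLs collapse into the single board page, which is the page the reply suggests can actually be identified.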

I created a project in GSA PI to trim to root and remove duplicate domains (TTR RD) from my identified URLs (also from GSA PI).

Now all my lists look like this:

I also did this to my running campaigns before running them on the TTR RD list above.
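For anyone who wants to reproduce a "trim to root + remove duplicate domain" pass outside GSA PI, for example to double-check a list, here is a minimal Python sketch. It is not GSA PI's implementation. It assumes "root" means `scheme://host/` and that duplicates are judged by host only, so `http://` and `https://` on the same host collapse, while `www.example.com` and `example.com` stay separate. Trimming to root also discards the path (for example `/bbs/board.php?bo_table=free`), so the resulting list carries only the domain.

```python
from urllib.parse import urlsplit


def trim_to_root(url):
    """Reduce a URL to scheme://host/; return None if it has no scheme or host."""
    parts = urlsplit(url.strip())
    if not parts.scheme or not parts.netloc:
        return None
    return f"{parts.scheme}://{parts.netloc.lower()}/"


def trim_and_dedupe(urls):
    """Trim every URL to its root and keep only the first root seen per host."""
    seen_hosts = set()
    roots = []
    for url in urls:
        root = trim_to_root(url)
        if root is None:
            continue  # skip lines that are not absolute URLs
        host = urlsplit(root).netloc  # already lower-cased by trim_to_root
        if host in seen_hosts:
            continue
        seen_hosts.add(host)
        roots.append(root)
    return roots


if __name__ == "__main__":
    print(trim_and_dedupe([
        "https://xn--v52b2zd5t6jbib523m.com/bbs/board.php?bo_table=free&wr_id=46342",
        "http://xn--v52b2zd5t6jbib523m.com/bbs/board.php?bo_table=free",
    ]))
    # ['https://xn--v52b2zd5t6jbib523m.com/']
```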

How am I still seeing this kind of URL in "No engine matches"?

I have no idea where these URLs are coming from, because:

1. No scraping engine is selected in GSA SER.

2. I am not doing any "competitor URL link scraping and link building" in the target options.

3. Only the TTR RD list is selected.

Now I am 99% sure that GSA is not being fed what I am trying to feed it. It might be my mistake or a bug. Could someone please carry this research on from here?

@Sven, can you please help?

I kept "Use URLs linking on same verified URL (supported by some engines only)" unchecked, as I am just testing the verified list or the GSA PI output.

But when I kept getting "No engine matches", I tested both with and without the option to try to locate a new URL on "no engine match" (useful for some engines).

Here is my current screenshot.

I double-checked the settings, cleared the target URL cache and history, and ran it again. Here is a quick recap:

With 7 projects and 150 threads, I am getting 1-3 LPM.

Most of what I see in the logs is "No engine matches".

Very few download failures (30-35) using BuyProxies semi-dedicated proxies.

I also tried residential 30-minute rotating proxies, but with no improvement.

Emails are fine. My own .com domains are hosted on cPanel with no firewalls or blockers, and they work fine with SEO Autopilot.

GSA PI has identified thousands of URLs and is extracting external URLs from verified links, so it should give me far more than 2 LPM.

I have a separate server to run PI and sync the list, but for now I just want to see 10 LPM to confirm my setup is fine before I scale anything up.

What is the trick I am missing?

I have no way to check whether whatever is coming in comes from the PI output or not, but it seems like it does not.
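One rough way to check this is to compare the hosts of the "No engine matches" URLs against the hosts in the selected site list. Here is a minimal Python sketch. It assumes both lists are available as plain-text files with one URL per line. How you copy the log lines out is up to you, since this sketch does not read SER's own files, and the file names in the usage comment are hypothetical.

```python
import sys
from urllib.parse import urlsplit


def read_urls(path):
    """Read a plain-text file with one URL per line, skipping blank lines."""
    with open(path, encoding="utf-8", errors="ignore") as fh:
        return [line.strip() for line in fh if line.strip()]


def host_of(url):
    """Lower-cased host name of a URL, or '' if none can be parsed."""
    try:
        return urlsplit(url.strip()).hostname or ""
    except ValueError:  # e.g. a malformed IPv6 literal
        return ""


def not_in_site_list(logged_urls, site_list_urls):
    """Return logged URLs whose host never appears in the site list."""
    known_hosts = {host_of(u) for u in site_list_urls}
    known_hosts.discard("")
    return [u for u in logged_urls if host_of(u) not in known_hosts]


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical file names):
    #   python check_source.py no_engine_matches.txt sitelist.txt
    logged, site_list = read_urls(sys.argv[1]), read_urls(sys.argv[2])
    for url in not_in_site_list(logged, site_list):
        print(url)
```

Any URL printed is on a host that never appears in the site list, which would support the suspicion that the targets come from somewhere else. An empty result would suggest they do come from the list, only with different paths. Matching is by host only, so `www.` and non-`www.` variants are treated as different hosts.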

I know it is a lot to ask, but I am OK with providing RDP access via DM, and I will be available in DM to answer any questions you might have during the diagnosis.

Sven said:

Please consider checking out my server.

If anybody is using GSA PI successfully with GSA SER without getting "No engine matches" all over, and wants to help, I would appreciate their time as well.

Just a single batch for you:

If these links are being picked from site lists, then they must be GSA PI verified.

But as far as I can tell, these URLs are coming from nowhere and do not come from my selected site list.

https://xn--v52b2zd5t6jbib523m.com/bbs/board.php?bo_table=free&wr_id=46342

Right now, I am using "Use URLs linking on same verified URL (supported by some engines only)", and I am getting 20-25 LPM, but all of them are comments.

Now I understand why I am not getting many verified submissions of other types.

Any further help, please?

The same proxies give me 30 LPM with blog comments. I am using semi-dedicated proxies from BuyProxies as well as 30-minute rotating residential proxies.

I am going to send you (in DM) a project backup with content and everything, as well as my proxies, which have no IP restriction, for diagnosis.

Thanks

I just want to force a fixed engine so that SER skips identification and simply uses that engine script to submit. I think it would help with the "no engine matches" problem, because SER often fails to identify URLs from a previously verified list.

hxxp://aanline.com/kor/board/bbs/board.php?bo_table=free&wr_id=445549#GnuBoard