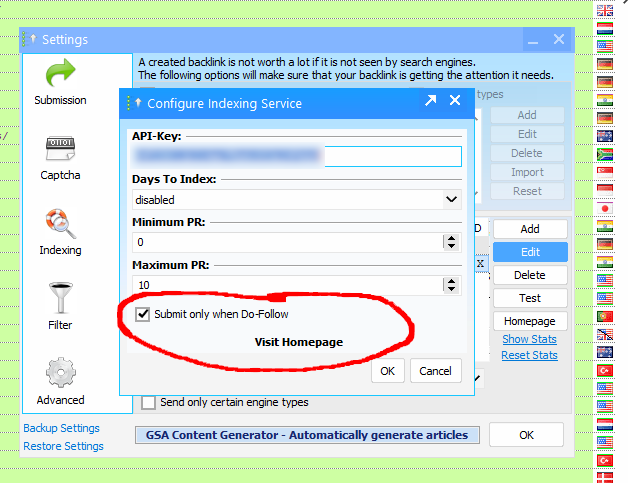

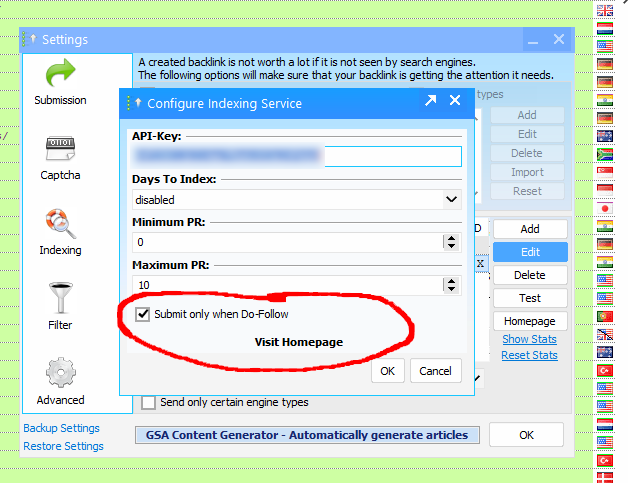

Additional filter for indexing services

cherub

SERnuke.com

I noticed yesterday that although noindex tags are stored with the verified link information ('flag 0'), these URLs still get sent to indexing services. Sending a noindex page for indexing is a waste of resources, so could we get an additional checkbox in the indexing options to skip them?
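A minimal sketch of what the requested checkbox would do: before submitting, drop any verified link whose stored robots flag marks it as noindex. The `VerifiedLink` record, its field names and `urls_for_indexing` are hypothetical illustrations, not the program's actual data format or API.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class VerifiedLink:
    """Hypothetical verified-link record; field names are assumptions."""
    url: str
    noindex: bool  # True when the stored robots flag marks the page as noindex


def urls_for_indexing(links: Iterable[VerifiedLink], skip_noindex: bool = True) -> List[str]:
    """Return the URLs to submit to an indexing service.

    skip_noindex stands in for the proposed checkbox: when it is True,
    pages flagged as noindex are left out of the submission.
    """
    return [link.url for link in links if not (skip_noindex and link.noindex)]


links = [
    VerifiedLink("http://example.com/a", noindex=False),
    VerifiedLink("http://example.org/b", noindex=True),
]
print(urls_for_indexing(links))                      # ['http://example.com/a']
print(urls_for_indexing(links, skip_noindex=False))  # both URLs
```

Keeping the flag as a parameter means the current behaviour (send everything) stays available with the box unchecked.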

Comments

An option either way would be great.

Is the 'days to index' setting in the main program's global options the same function as the project-specific option 'send to index/ping delayed by'? And if so, will the project-specific option always override the global one?

But could we somehow apply the noindex detection to existing URLs before they are sent to indexers or added to a tiered project, and then use the result to add those particular websites to a blacklist so we avoid posting to them again in the future?
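A rough sketch of the detect-then-blacklist idea, assuming the page HTML has already been fetched: it reads `<meta name="robots">` directives with the standard-library `HTMLParser` and collects the hostnames of noindex pages. It ignores the `X-Robots-Tag` HTTP header, and `is_noindex` and `noindex_domains` are hypothetical helpers, not features of any existing tool. Note that blacklisting a whole domain from one page is a judgment call, since a site may noindex only some page types.

```python
from html.parser import HTMLParser
from typing import Dict, Set
from urllib.parse import urlsplit


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names; values may be None.
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindex(html: str) -> bool:
    """True if the page's meta robots tag says noindex (or 'none')."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives or "none" in parser.directives


def noindex_domains(pages: Dict[str, str]) -> Set[str]:
    """Given URL -> HTML, return the hostnames of pages marked noindex."""
    return {urlsplit(url).hostname for url, html in pages.items() if is_noindex(html)}


pages = {
    "http://blog.example.com/post-1":
        '<html><head><meta name="robots" content="noindex, follow"></head></html>',
    "http://forum.example.net/profile/42":
        '<html><head><meta name="robots" content="index, follow"></head></html>',
}
print(noindex_domains(pages))  # {'blog.example.com'}
```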

So my article or profile URL with my content/links on it could be set to index/follow, but still be unindexable because of what the main domain's robots.txt file says about that type of page sitewide? Or am I still misunderstanding?
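One way to see how the two layers interact, sketched with Python's standard `urllib.robotparser`: robots.txt controls crawling rather than indexing as such, so a compliant crawler never fetches a disallowed URL and never sees that page's own index/follow meta tag. The robots.txt rules and URLs below are made-up examples. Python's parser does simple prefix matching, so it only approximates how a particular search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical sitewide rules: all profile pages are blocked for every crawler.
robots_txt = """
User-agent: *
Disallow: /profile/
"""

rp = RobotFileParser()
rp.parse(robots_txt.splitlines())

profile_url = "http://forum.example.net/profile/42"     # page itself may say index,follow
article_url = "http://forum.example.net/articles/my-post"

print(rp.can_fetch("Googlebot", profile_url))  # False: blocked before the page is read
print(rp.can_fetch("Googlebot", article_url))  # True: no rule matches this path
```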

This ties into the other thread about wanting to allocate different indexing services per project or link type, rather than only at the global level.