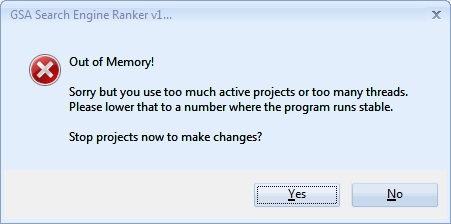

"Out of memory" error... but nowhere close to out of memory

Hi,

Since the most recent version of SER, I'm getting this message every day on a server I leave running 24/7.

This server has 16 GB of memory. SER is currently using only 3 GB. Total memory used by SER, CB, and all OS functions is only 5 GB. Not even 50% of the memory is in use, yet I'm getting this error every day.

Any idea what could be causing it?

SER is only running at 75 threads. I've recently run this same server on 150+ threads without any problems.

If I press "no" I get the SER crash dialog.

It seems one of my recent updates introduced a memory leak; that's the only thing I can think of that would explain this. I'm watching the memory now: after 5 minutes of running, SER is fluctuating between 200 and 300 MB. The problem is how it goes from 300 MB to 3 GB with the same projects running.

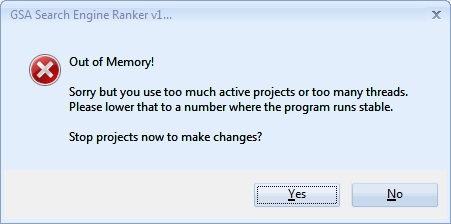

Comments

I will try the "Delete unused accounts" because it could be related, as these are "scraping template" projects that have been re-used (cloned + re-used) many times.

Before starting a new one (after cloning), I always do "Delete Target URL History," but I've never done "Delete unused accounts".

Will let you know.

Edit:

After ~15 minutes of running, the memory usage has gone from 250 MB to 800 MB. I'll see if it crashes later on.
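For a rough sense of how fast that is, here's a quick back-of-the-envelope sketch. It assumes the growth is linear (it may well not be) and treats the 3 GB level where the error shows up as 3000 MB. The function names are just made up for this example; nothing here comes from SER itself.

```python
def growth_rate_mb_per_min(start_mb, end_mb, minutes):
    """Average memory growth in MB per minute between two readings."""
    return (end_mb - start_mb) / minutes


def minutes_until(limit_mb, current_mb, rate_mb_per_min):
    """Minutes until limit_mb is reached, assuming the rate stays constant.

    Returns None if memory is not growing.
    """
    if rate_mb_per_min <= 0:
        return None
    return max(0.0, (limit_mb - current_mb) / rate_mb_per_min)


# Readings from this thread: 250 MB -> 800 MB over roughly 15 minutes.
rate = growth_rate_mb_per_min(250, 800, 15)

# Assumption: the error appears around 3 GB, taken here as 3000 MB.
eta = minutes_until(3000, 800, rate)

print(f"growth: {rate:.1f} MB/min, about {eta:.0f} more minutes to reach 3000 MB")
```

If the growth really were steady, SER would hit the 3 GB mark in about an hour rather than after a day, so the real curve probably flattens out or comes in bursts. Logging a reading every few minutes would show which.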

Barely any links have been submitted or accounts created. I leave these projects running with SER's search engine scraping turned on and all search engines (besides Google) enabled.

Could it be related to the search engines / scraping? I've been using this exact method for 3+ years and never had a problem, never seen anything like this error... so it would've had to be something recent that changed to cause this.

I do have a large list of "don't post to domains containing" entries on some of the projects, but I don't see how that would cause such a large increase in memory usage... Is there any way to see which projects are causing the issue?

Could you try adding ~30k domains to your negative list and see if it causes the same issue for you?