Super-Low Verification on Verified Links.

SheilaL

chicago

Hello,

I scraped a list of 10k URLs with SB and later verified them in GSA. Now I'm trying to build links to them, but my Submitted count is at 400 and Verified is only at 30. Can someone tell me why this is?

BTW, I have my global options set up pretty generically to allow for a high LPM. The most common message is "no engine matches".

Sorry if someone has asked this before, but I can't seem to figure out the issue.

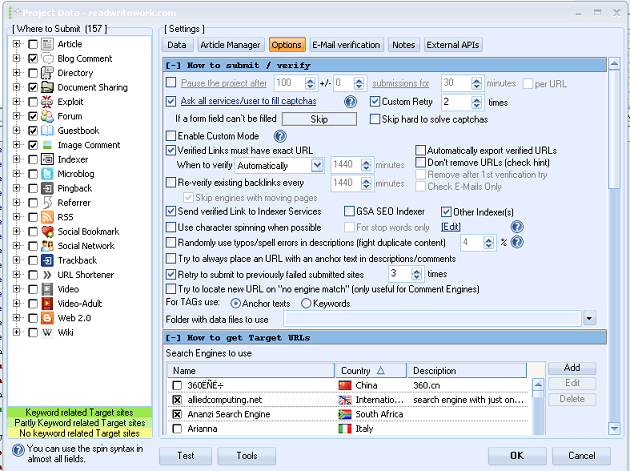

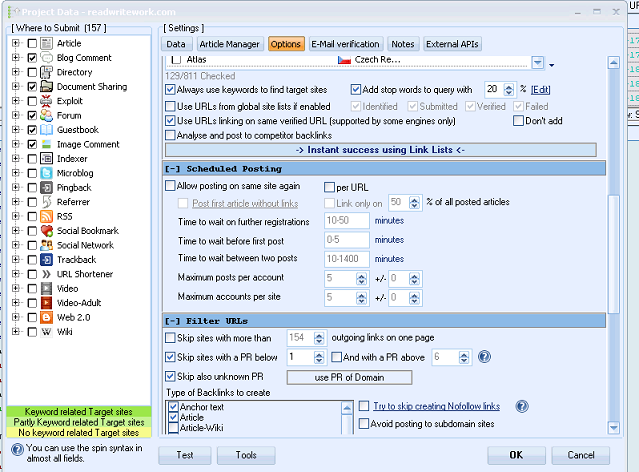

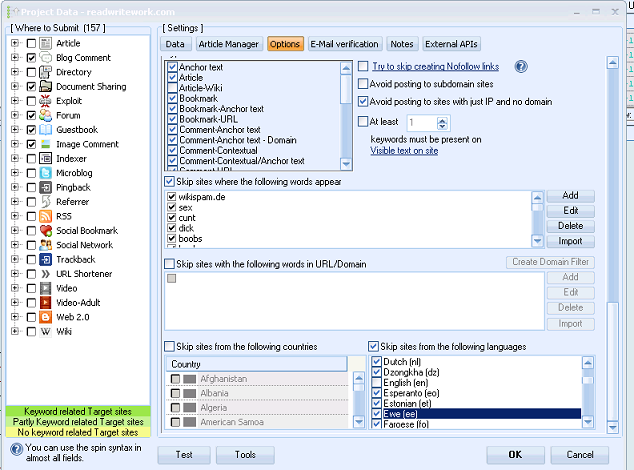

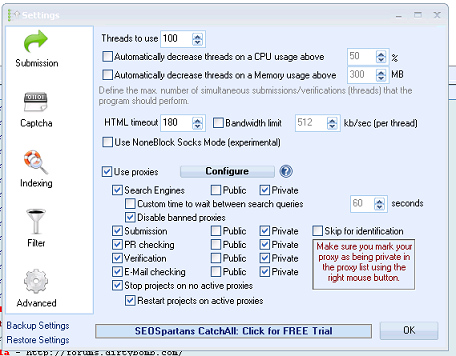

Settings:

Comments

Besides this little glitch, I don't see much wrong right now.

Is this what is happening? Or is it something else?

If so, those ARE still successful postings, just not ones we can verify. I guess the implications then are that we can't build tiers with them, either, but maybe we wouldn't want to b/c our posted backlink and comment moves down from page to page?

@Sven, could it also be that although those sites were initially found using valid footprints, the form page had been modified, even in a small, but crucial way?

Therefore, the sites would be found, but SER wouldn't be able to post to them.

@SheilaL, you stated that these were, in fact, verified with SER? So this confuses me... did you already successfully verify links to them on a junk project? Just trying to learn and help at the same time.

Secondly, uncheck anything regarding PR because it isn't working anymore and is mostly (95%) wrong. Just use Moz for free to check the site's PR, or why bother? It's a numbers game, so just keep posting and you will rank eventually if you use a good strategy.

Thirdly, don't send links to GSA Indexer and Redirector as they are built, because it will cause some delays. Whenever they reach 10,000, send them in bulk to those tools, and don't send all of the links, because you don't want Google to index everything at once. You have to be as irregular as possible.
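The batching idea above can be sketched roughly as follows. This is only an illustration in Python, not anything built into GSA SER or the indexer tools: the function name `take_batch`, the 70% share, and the idea of picking the subset at random are all assumptions; only the 10,000 threshold comes from the post.

```python
import random


def take_batch(pending, threshold=10_000, percent=70, rng=None):
    """Split `pending` into (batch, remaining).

    Hypothetical helper: nothing is released until `pending` holds at
    least `threshold` links; then a random `percent` of them is picked
    so that not every link is submitted for indexing.
    """
    rng = rng or random.Random()
    if len(pending) < threshold:
        # Not enough links collected yet: send nothing.
        return [], list(pending)

    # Integer arithmetic avoids float rounding surprises.
    k = len(pending) * percent // 100
    chosen = set(rng.sample(range(len(pending)), k))

    # Keep the original order within each group.
    batch = [url for i, url in enumerate(pending) if i in chosen]
    remaining = [url for i, url in enumerate(pending) if i not in chosen]
    return batch, remaining
```

You would call it with your list of verified URLs whenever new ones come in, export `batch` to a file for the indexer, and either drop `remaining` or carry it over to the next round.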

Also, use a good captcha solver. I would recommend XEvil as the first line and GSA CB as the second line, and from within GSA CB let it send the failed ones to XEvil again so it gets another round with them. That mostly won't happen, because XEvil will solve them on the first line.

The only discount I found for it: http://www.botmasterlabs.net/xrumer/?p=XRUMERDiscounte

Then you need to use private proxies for submission and search engines only. Leave everything else without proxies. Also use SolidSEOVPS; they are crazy fast and I really suggest you use their new 10 Gbps VPS. I build over half a million backlinks daily in XRumer and about 200k in GSA SER, and I don't manage to use half of the network capacity.